Project Overview

For the last two decades (and more), advocates have touted the potential benefits of XML-based workflows in scholarly publishing. XML would be the single source of truth. XML would allow multi-channel publishing. XML would give meaning to content through semantic tagging and rich metadata. XML would allow the mining of data and the linking of insights among different sources. If only we could rid ourselves of proprietary formats like MS Word documents and PDFs and start with structured content, the world would be a better place.

But as we got further into the new millennium, the promise of XML seemed to fade. For use cases outside the document space, JSON is a lighter format for conveying semantic information and, despite XML’s mature tech stack, easier for most programmers to handle (https://www.balisage.net/Proceedings/vol26/html/StLaurent01/BalisageVol26-StLaurent01.html). Within the document space, authoring and editing in XML was considered just too hard. Among other issues, critics pointed out that whitespace in XML can be meaningless and that, to create a new paragraph, you cannot just hit [Enter]; you must close the previous paragraph’s tag and open a new element (https://contentwrangler.com/2016/02/23/why-does-xml-suck/). Efforts to put XML at the center of scholarly publishing workflows languished.

The value of XML to store and transmit semantic information was not entirely lost. Especially with the growth in importance of metadata such as funding information and persistent identifiers for everything from images to authors, the Journal Article Tag Suite (JATS) has become a vital tool in scholarly publishing. But its value has been primarily as a final delivery and archiving format. In most publishing workflows, the XML is either created at the end of the process, or its care and handling has been left to operators skilled in specialized software while content experts, the authors and editors, continue to work in Word and PDF.

However, as web-based, user-friendly XML editors were developed, new possibilities for XML-through workflows began to open, and Aries Systems saw an opportunity. Aries’ submission and peer review system Editorial Manager and its production tracking system ProduXion Manager already provided two of the necessary pieces of the puzzle: the metadata that belongs in the XML and the workflow engine necessary to assign and track tasks performed by all the different actors in the workflow. What was needed were the tools to create, edit, transform, and analyze the content.

Well, we’ve made progress. Aries worked with Fonto XML to develop a user-friendly but comprehensive JATS editing tool. We worked with Typefi to transform XML to PDF and other publication channels. We integrated Saxon to facilitate metadata exchange and quality analysis, but we still needed good XML at the beginning of the workflow. As much as we would love them to write in a structured format, authors, especially of unsolicited manuscripts, and publishers are still wedded to Word.

And not just good XML, but consistently good XML. Even with a user-friendly toolset, XML did not stop being hard. Aries could not expect editors, much less authors, to diagnose and fix problems arising from a suboptimal conversion. Given the almost absurd depth of possibility for author use and abuse of Word features, even the most sophisticated automated Word-to-XML conversion engine will have a fraction of articles it handles badly. Then what should editors do when the engine puts out poorly constructed XML? Fix the XML and compare it against the Word file to ensure nothing has been missed? Or try to figure out what the conversion didn’t like, fix it in the Word file, run it through again, and hope they got it right?

Convincing publishers to take control of their content rather than throwing it over a wall for a vendor to take care of is difficult enough. We could not expect them to also develop expertise in XML or the pitfalls of every feature in Word. Aries needed a conversion engine that quickly turns around good-quality JATS XML from the highest practical percentage of unstructured Word files but also recognizes when the results are not optimal so that the file can be diverted for manual review and remediation. Aries turned to Data Conversion Laboratory (DCL) for its support in creating this automated XML conversion engine.

Workflow Overview

DCL architected an automated workflow comprising nine key steps that the system must traverse from manuscript acceptance to delivery into Aries’ ProduXion Manager, with the goal of a 10-minute turnaround time per manuscript. DCL and Aries understood that not every article would pass straight through the automated system, but also that every manuscript that did pass all nine workflow steps would produce XML that is not merely valid but good, conforming to the Aries-defined subset DTD based on JATS 1.2. The ultimate goal is to accurately convert at least 80% of reasonably conformant Word files.

The workflow steps of each article are listed below.

- Receive manuscript
- Preprocess
- Autostyle
- Conversion
- Confidence Analytics
- Editorial
- QA
- Final packaging
- Delivery to Aries

The system is architected to

- invoke or bypass activities based on the nature of the content
- maintain metadata, status, metrics, issues alerts and updates
- provide management reporting and full transparency

Manual intervention would be required for manuscripts that did not pass through the system. However, the more the system is used, the more rules for automation and processing anomalies can be identified and applied, thus improving performance over time.

Workflow Details

While nine workflow steps initially sound simple and streamlined, the complexities in each step are considerable. Every manuscript is nearly as unique as the author who created it. Each step in the workflow enables subsequent actions. If an action fails in a workflow step, the system will route the manuscript for human intervention.
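
The routing rule described above can be sketched as a small step runner. This is only an illustration of the idea, not DCL's implementation: `Job`, `StepFailure`, `run_workflow`, and the status strings are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable


class StepFailure(Exception):
    """Raised by a step that cannot process the manuscript automatically."""


@dataclass
class Job:
    job_id: str
    status: str = "received"
    history: list = field(default_factory=list)  # (step name, outcome) pairs


def run_workflow(job: Job, steps: list[tuple[str, Callable[[Job], None]]]) -> Job:
    """Run the steps in order; on the first failure, route the job to a human."""
    for name, step in steps:
        try:
            step(job)
        except StepFailure as exc:
            job.history.append((name, f"failed: {exc}"))
            job.status = "manual_review"
            return job
        job.history.append((name, "ok"))
    job.status = "delivered"
    return job
```

Because each step only runs when the previous one succeeded, the history records exactly where a manuscript left the automated path.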

Receive

Upon peer review acceptance, Aries invokes the DCL submission web service API, passing a payload that contains the zip file for the article along with a callback URL and a job identifier for DCL to use when it is ready to return the final results to Aries.

This initial workflow step unpacks the files submitted and securely saves and registers the assets and corresponding metadata in DCL’s Production Control System (PCS). PCS provides workflow management and scheduling/reporting capabilities with comprehensive monitoring mechanisms that track timeliness and quality levels, generating alarms when requirements are at risk of not being met. It was developed and built specifically to handle multiple production sites and multiple workflows simultaneously and is time-tested to handle the volume and accuracy that would be needed to meet Aries requirements.

At this stage, the system immediately determines whether a document is seriously problematic, for example because it is password-protected or in a source format other than Word. Future stages of the project are intended to support PDF source files as well.

Upon successful completion of all these steps, including the triage, the workflow is initiated.
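
A triage check of this kind might look like the following sketch. It assumes, for illustration only, that the accepted source format is `.docx`; the function name `triage` and the returned messages are hypothetical. It relies on the fact that a normal `.docx` is a zip package, whereas password-encrypted Office files (like legacy `.doc` files) are stored in an OLE2 compound file.

```python
import zipfile
from pathlib import Path

# Magic bytes of an OLE2 compound file. Legacy .doc files and
# password-encrypted .docx files both use this container.
OLE2_MAGIC = b"\xd0\xcf\x11\xe0\xa1\xb1\x1a\xe1"


def triage(path: str) -> str:
    """Return 'ok' or a short reason why the file cannot enter the workflow."""
    p = Path(path)
    if p.suffix.lower() != ".docx":
        return "unsupported source format"
    with open(p, "rb") as fh:
        head = fh.read(8)
    if head == OLE2_MAGIC:
        return "password-protected or legacy binary file"
    if not zipfile.is_zipfile(str(p)):
        return "not a valid Word package"
    with zipfile.ZipFile(str(p)) as zf:
        # Every WordprocessingML package carries its main part here.
        if "word/document.xml" not in zf.namelist():
            return "not a valid Word package"
    return "ok"
```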

Pre-Process

The pre-process step normalizes the manuscript to enable subsequent autostyling. In some instances, normalization is straightforward, such as accepting all track change revisions. Other normalization tasks are complex, such as using the MathType API to convert non-native math (created with MathType or older versions of Word) to MathML.

Pre-process is the first step in introducing industry standards to promote consistency in the content. It converts the content to the DCL Hub format, which enables connectors to other delivery formats such as XML, HTML, and XHTML.

Following are some of the individual actions taken in pre-processing:

- Converting non-native math to MathML
- Converting native Word math to MathML
- Correcting malformed URLs
- Normalizing lists
- Accepting all track change revisions
- Inserting page number bookmarks
- Handling line breaks
- Extracting and converting vector/raster images to PNG
- Converting to Hub with the OASIS table model
- Converting Word table properties to XHTML/CSS
- Recognizing table headings
- Representing tables as HTML
- Handling inconsistent table columns, row separators
- Normalizing fields such as symbols to XML style grammar
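
As an illustration of one of the simpler actions in the list, accepting tracked changes, here is a minimal sketch that works directly on WordprocessingML. It is not DCL's code, and it handles only run-level insertions (`w:ins`) and deletions (`w:del`); moves, formatting changes, and deleted paragraph marks are out of scope. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

W = "http://schemas.openxmlformats.org/wordprocessingml/2006/main"
INS = f"{{{W}}}ins"
DEL = f"{{{W}}}del"


def accept_tracked_changes(elem: ET.Element) -> ET.Element:
    """Drop deletions (w:del) and unwrap insertions (w:ins), in place."""
    kept = []
    for child in list(elem):
        if child.tag == DEL:
            continue  # deleted content disappears once the change is accepted
        accept_tracked_changes(child)  # clean nested changes first
        if child.tag == INS:
            kept.extend(list(child))  # inserted runs become ordinary content
        else:
            kept.append(child)
    elem[:] = kept
    return elem
```

Calling `accept_tracked_changes` on the root of `word/document.xml` leaves a tree with no revision wrappers, which simplifies every later step that inspects runs and paragraphs.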

Autostyle

The autostyle step applies consistency and styling that further enables consistent conversion to XML meeting the Aries specification. A major component of this step deals with images, tables, and headings:

- Link graphics and graphic captions, handling layered graphics, styling captions, accommodating page boundaries, and logging anomalies such as unconnected drawings
- Identify and tag all the elements in front matter, body, and back matter
- Autostyle based on the content structure – heading levels, lists

Graphics can be complex depending on how authors prepare and supply images. This stage ensures that for every graphic/image called out in text, there is a corresponding asset file as well as a caption for the graphic/image in the narrative text. In some cases, images might have multiple layers in the Word manuscript, such as a grid with four panels labeled A, B, C, D. The autostyle process extracts each panel into a separate asset, then tags and structures it to align the figure callout with the correct asset file and associated metadata.

DCL employs a series of techniques to carry out the specific actions in this workflow step. spaCy, an industrial-strength natural language processing library for Python, is used to detect and autostyle author names and affiliations. A complex layering of business intelligence and configurable, custom algorithms is used to detect, structure, and autostyle the document sections and the elements within the content, such as table captions, table citations, figure captions, figure citations, reference citations, and appendix citations, and to decompose and style the references.

Autostyling might seem straightforward, but the reality is that it needs to deal with many inconsistencies. For example, while we need to tag the reference section with the XML tag <ref-list>, authors often misspell “references.” When citing references, authors often misspell the author name, cite the wrong publication year, or use unmatchable reference subscript links. Information is often missing and must be inferred, as when author names and affiliations must be linked without direct information, such as a subscript, to establish the relationship.

The system is designed to run through a series of common typos and repeated errors identified across a large sample content set. Business rules for autoconversion have been identified through natural language processing, and the system allows for ongoing inclusions of new rules as identified.
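
To make the misspelled-heading problem concrete, here is a minimal sketch that uses fuzzy string matching from Python's standard library. It stands in for, and is much simpler than, the rule set described above; the heading list, the function name, and the 0.8 cutoff are assumptions made for the example.

```python
import difflib

# Hypothetical list of section headings the autostyler knows how to tag.
KNOWN_HEADINGS = [
    "abstract", "introduction", "methods", "results",
    "discussion", "acknowledgments", "references",
]


def match_heading(text: str, cutoff: float = 0.8):
    """Return the known heading closest to `text`, or None if nothing is close."""
    cleaned = text.strip().rstrip(":").lower()
    matches = difflib.get_close_matches(cleaned, KNOWN_HEADINGS, n=1, cutoff=cutoff)
    return matches[0] if matches else None
```

With this cutoff, `match_heading("Refrences")` resolves to `"references"`, while an unrelated heading such as `"Funding"` returns `None` and can be logged as an anomaly instead of being guessed at.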

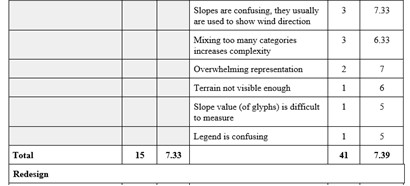

Table construction can, at times, be quite complex in these manuscripts. Specific font formats (italics, bold), shading in tables, and colors might all carry meaning that should not be lost. Every table must be tagged with attributes on each specific table element to denote the style represented in the source file.

For example, the author manuscript may be styled with a basic table, table body, table rows, and table cells. Adding attributes to the table elements that detail the stylistic choices ensures that the manuscript is represented both physically and meaningfully, in line with the author's intended choices:

Source:

XML Representation:

Conversion

While the conversion workflow may appear to be where the magic happens, it is an excellent illustration of how critical preceding steps are to the successful conversion to XML. Verifying autostyling actions and repairing anomalies is the first process.

The system processes and converts special characters such as currency symbols (£, ¢), legal symbols (©), mathematical symbols (<, √, °) to Unicode to ensure consistency in the content ingested by further downstream systems.
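
A minimal sketch of this kind of normalization, assuming the symbols arrive as HTML-style character references or as decomposed Unicode sequences (the source does not say how they are encoded, and Word's Symbol-font characters would need a separate mapping table). The function name is hypothetical.

```python
import html
import unicodedata


def to_unicode(text: str) -> str:
    """Resolve character references, then normalize to Unicode NFC."""
    resolved = html.unescape(text)          # "&pound;" -> "£", "&#8730;" -> "√"
    return unicodedata.normalize("NFC", resolved)  # "e" + U+0301 -> "é"
```

When the result is later serialized to XML, characters such as `<` still have to be escaped by the XML writer.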

Bibliographic references and author affiliation linking are well known for myriad complexities. For example, references to content other than journals and books may be a challenge to interpret, and the order of first and last names may vary by region, as in Chinese, where an author’s last name is listed first and the first name last.

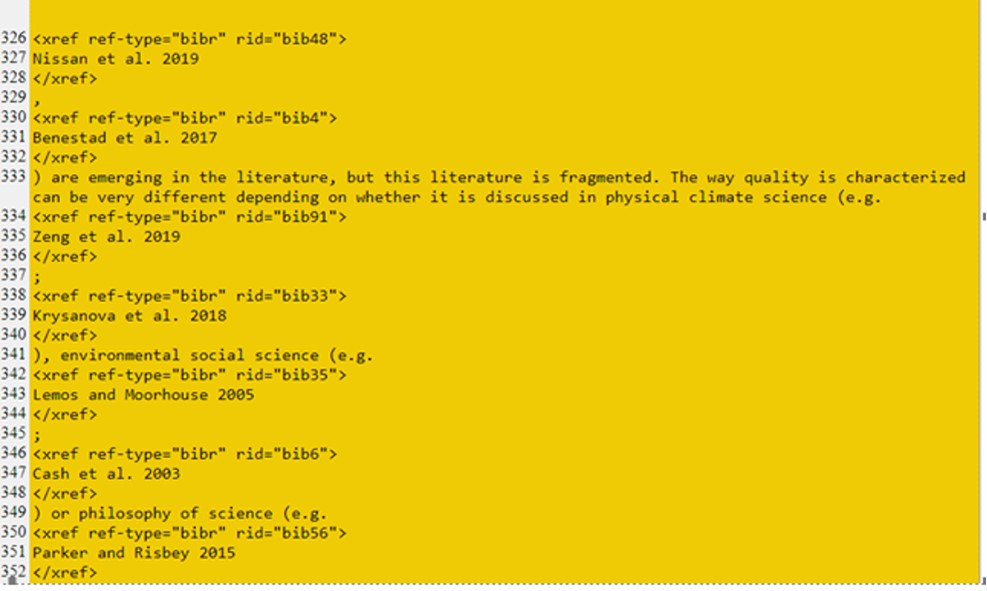

DCL decomposes bibliographic citations presented in straight text and applies detailed structure that allows for accurate search and discovery. It also detects and links bibliographic citations within an article to the corresponding bibliographic reference.

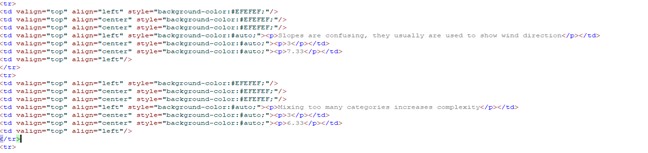

Following is an example of in-text bibliographic citations submitted in a manuscript:

The new system extracts the free-form bibliographic citation text and structures it within an <xref> tag to facilitate linking and provide clarity and structure.
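
The linking step can be sketched as follows, assuming numeric citations written in square brackets such as `[3]`; author-date styles would need a different pattern. The `ref-type="bibr"` and `rid` attributes are standard JATS, while the function name and the mapping from citation numbers to reference ids are hypothetical.

```python
import re
from xml.sax.saxutils import escape

CITATION = re.compile(r"\[(\d+)\]")


def link_citations(text: str, ref_ids: dict[int, str]) -> str:
    """Wrap numeric citations like [3] in JATS <xref> elements."""
    parts, last = [], 0
    for m in CITATION.finditer(text):
        number = int(m.group(1))
        parts.append(escape(text[last:m.start()]))
        rid = ref_ids.get(number)
        if rid is None:
            # No matching reference: keep the citation as plain text.
            parts.append(escape(m.group(0)))
        else:
            parts.append(f'[<xref ref-type="bibr" rid="{rid}">{number}</xref>]')
        last = m.end()
    parts.append(escape(text[last:]))
    return "".join(parts)
```

Citations that cannot be matched are left untouched rather than linked to a guess, so a later quality check can flag them.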

Other items in the conversion stage include

- Autostyling verification and repair
- Author/affiliation linking
- Special characters handling
- Table footnotes
- Retain and propagate table colors, shading, bolding
- Bibliographic reference decomposition
- Figure citations
- Reference citations
- Appendix citations
- Conversion to the Aries version of the JATS 1.2 XML schema
- Populating metadata

Along with the Word file, Aries provides an XML file with metadata about the article from Editorial Manager. Journal metadata that is added to the article XML as part of the conversion step includes ISSN, journal title, and other publisher information. Article metadata includes received/revised dates, volume and issue, funding information, and, when author names can be unambiguously matched, ORCIDs.
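
The "unambiguously matched" condition for ORCIDs can be illustrated with a short sketch. The structure of the Editorial Manager metadata is not described here, so the list of dictionaries with `surname`, `given`, and `orcid` keys is purely hypothetical, as is the function name; the `<contrib-id contrib-id-type="orcid">` element is standard JATS.

```python
import xml.etree.ElementTree as ET


def add_orcids(article: ET.Element, em_authors: list[dict]) -> int:
    """Attach an ORCID to each <contrib> whose name matches exactly one record."""
    added = 0
    for contrib in list(article.iter("contrib")):
        surname = contrib.findtext("name/surname", "").strip().lower()
        given = contrib.findtext("name/given-names", "").strip().lower()
        hits = [
            a for a in em_authors
            if a.get("orcid")
            and a["surname"].lower() == surname
            and a["given"].lower() == given
        ]
        if len(hits) == 1:  # zero or several candidates: leave for review
            cid = ET.Element("contrib-id", {"contrib-id-type": "orcid"})
            cid.text = hits[0]["orcid"]
            contrib.insert(0, cid)  # contrib-id precedes the name in JATS
            added += 1
    return added
```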

Confidence Analytics

The DCL Global Quality Control System (GQC) maintains XML-based cross-project and project-specific quality control checks that are configured and executed at various steps in the processing workflow to ensure consistently high levels of quality.

Once the conversion is complete, an extensive set of automated QC checks is performed to assess the source anomalies and accuracy level of the resulting XML. The results of the checks are analyzed and weighed using DCL’s custom algorithms to provide a confidence factor and automated recommendation for manual review or straight-through processing for each document.

If the confidence factor indicates that manual review is required, the specific areas of the document to be reviewed and potentially repaired are identified and communicated to PCS to orchestrate the appropriate workflow steps.
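
DCL's algorithms are custom and not described here, so the following is only a sketch of the general shape such a calculation could take: a weighted pass rate compared against a threshold, with the failing locations collected for targeted review. The weights, the 0.9 threshold, and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    weight: float        # relative importance of the check (hypothetical)
    location: str = ""   # element id or XPath to review if the check fails


def assess(results: list[CheckResult], threshold: float = 0.9):
    """Return (confidence score, recommendation, locations needing review)."""
    total = sum(r.weight for r in results)
    passed = sum(r.weight for r in results if r.passed)
    score = passed / total if total else 0.0
    review = [r.location for r in results if not r.passed and r.location]
    decision = "straight-through" if score >= threshold else "manual-review"
    return score, decision, review
```

Returning the failing locations alongside the score is what allows the manual review to be limited to the flagged parts of the document.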

Editorial

The editorial stage involves human intervention from both offshore and onshore resources. The combined onshore/offshore review supports time and budget concerns that are real factors when architecting a workflow that is in a near-constant state of movement.

The manual review is targeted and limited to the content elements that were highlighted for review and repair. Triage GQC is performed upon receipt of the updated XML to verify that only those identified elements were modified.
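
One way to verify that only the identified elements were modified is to mask those elements in both versions and compare what remains. The sketch below assumes the flagged elements carry `id` attributes; it is an illustration, not a description of how GQC performs the check, and the function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def only_flagged_changed(before_xml: str, after_xml: str, flagged_ids: set[str]) -> bool:
    """True if the two documents are identical outside the flagged elements."""

    def masked(xml_text: str) -> str:
        root = ET.fromstring(xml_text)
        for parent in list(root.iter()):
            for i, child in enumerate(list(parent)):
                if child.get("id") in flagged_ids:
                    # Replace the flagged subtree with an empty placeholder.
                    placeholder = ET.Element("masked", {"id": child.get("id")})
                    placeholder.tail = child.tail
                    parent[i] = placeholder
        # Canonical XML removes differences in attribute order and quoting.
        return ET.canonicalize(ET.tostring(root, encoding="unicode"))

    return masked(before_xml) == masked(after_xml)
```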

Quality Assurance

In the past, content quality review was a manual project, and the resources were simply not available or would often be outside of budget when considering the volume and scale publishers deal with today. DCL’s proficiency in automating QA checks decreases conversion turnaround time and vastly improves the quality of the final product. By applying a trifold approach of automated differencing, error analytics, and XML-based QC checks using GQC, DCL provides a model of continuous improvement that both allows the correction of issues prior to final delivery and proactively prevents those issues in the future.

Final Packaging

The Word-to-XML full-text conversion produces an XML file that is valid to JATS 1.2 and adheres to all XML style points detailed in Aries’ Full-Text XML Tagging Guidelines.

Final quality control checks are executed during packaging, such as verifying that every image callout has a matching image and vice versa. Images are renamed as necessary, line breaks and unique identifiers are repaired as appropriate, and the final, verified files – XML and images – are zipped and prepared for delivery back to Aries.
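
The two-way image check can be sketched as a set comparison. The example reads "callout" as a JATS `<graphic>` or `<inline-graphic>` element with an `xlink:href`, which is an assumption; the function name is hypothetical.

```python
import xml.etree.ElementTree as ET

XLINK_HREF = "{http://www.w3.org/1999/xlink}href"


def check_images(article_xml: str, image_files: set[str]):
    """Return (referenced but missing, supplied but never referenced)."""
    root = ET.fromstring(article_xml)
    referenced = {
        el.get(XLINK_HREF)
        for el in root.iter()
        if el.tag in ("graphic", "inline-graphic") and el.get(XLINK_HREF)
    }
    missing = sorted(referenced - image_files)
    unused = sorted(image_files - referenced)
    return missing, unused
```

A package is ready to be zipped only when both lists come back empty.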

Delivery to Aries

DCL’s PCS calls the Aries API at the provided callback URL, passing the job identifier, to notify Aries that a package is ready for delivery. Aries then invokes the DCL GetJob API and retrieves the results.

Additionally, a DCL daily reconciliation report confirms that all handshakes were successfully executed and that no files were dropped upon retrieval and return.
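
At its core, a reconciliation of this kind compares the job identifiers received with those returned. The sketch below shows that comparison only; the function name, the report keys, and the idea of excluding jobs still in manual review are assumptions, not details of DCL's report.

```python
def reconcile(received: set[str], returned: set[str],
              in_review: set[str] = frozenset()) -> dict:
    """Compare job ids received from Aries with those returned to Aries."""
    return {
        # Received, not returned, and not waiting on manual review.
        "dropped": sorted(received - returned - in_review),
        # Returned without a matching submission.
        "unexpected": sorted(returned - received),
    }
```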

Conclusion

In the scholarly publishing workflow, good XML (not simply valid XML) is imperative for every person who works in an editorial and production capacity. Copyeditors, production editors, editorial directors, and ultimately authors benefit when content is structured early in the publishing workflow. The frictionless flow of content across a production workflow reduces frustrating delays and inaccuracies, resulting in faster turn-around times and improved quality of published scientific content.

Aries plans to move XML-centered workflows upstream from production to peer review as the system matures. In peer review, consistent quality of XML is even more vital, as it will be authors, instead of the production editors employed by the publisher, who will be the first to see the results.

While still in its early stages, the new XML-first workflow developed by Aries and DCL reduces frustrating delays and inaccuracies and results in faster turn-around times, improved quality, better control and accessibility of key metadata, and, most importantly, an improved user experience for editors and production staff.