The Importance of Content Structure and XML in Managing Document Collections

Incorporating structure and identifying elements in a large document collection facilitates the discoverability of content as well as information interchange between systems and platforms. For digital content management and data exchange, XML has become the standard approach to store and transport data in human-readable and machine-readable format, and JATS has become a standard in scientific and technical publishing.

Dual readability by both humans and machines is critical for academic research, libraries, public archives (e.g., PubMed Central), indexes, hosting platforms, and vendors. A consistent and accurate XML structure, conforming to the latest JATS standard, is essential for maintaining the integrity and usability of content collections and getting the promised results.

Discoverability

Structured XML allows readers to find content more precisely by allowing for advanced searches beyond simple text queries. The structural cues in the content and the metadata enable precise searching. Well-formatted XML documents allow indexing tools and applications to effectively parse and better identify relevant data faster, which is particularly valuable in large datasets and digital libraries.

Content Interchange

Today, the same information is reused in many forms and is expected to transfer seamlessly between systems and platforms. Consistent and accurate XML structures are crucial for this content interchange. XML’s standardization allows disparate systems to communicate easily, ensuring that data retains its meaning and structure when transferred. Interoperability is fundamental for data sharing across organizational boundaries.

Accessibility

Accessibility to information for people with disabilities is important, and it is also often legally required. Accurate structuring and tagging of XML does much of the work to make content accessible and allows much more to be done. For example, images of tables and math that are not encoded are inaccessible to visually disabled users; XML provides the mechanisms needed to encode and structure tables and math so that they are readable by software readers to ensure they are accessible to those with visual and other disabilities.

Emerging and Future Applications

Content discoverability, interchange, and accessibility are well-established applications in the scholarly publishing arena; however, many new XML-dependent applications are currently in development, and we don’t know all the ones that are coming along. Applications in the wings include various AI applications as well, to meet the challenges of research integrity.

How Good Digital Repositories Go Awry

It stands to reason that an organized document collection is good and that those assembling XML document sets would take pains to keep them in good shape. So, where do things go wrong? Even in the most carefully curated collections, changes, errors, and discrepancies will accumulate and, over time, reduce the system’s efficiency.

-

In one large collection we worked on, it was revealed that 15% of articles in the collection had duplicate

IDs. -

An analysis of another collection, one that contained all published content from one journal, revealed errors on

<pub-date>s for 13% of the collection. -

Another publisher required updates to 73% of files for a seamless platform migration.

These percentages of errors and issues are not atypical. In fact, journal publishers are often surprised by the results of a deep analysis.

Changing Standards

Standards evolve. XML has been an industry standard in scholarly publishing since at least 2003, when the National Library of Medicine (NLM) introduced the NLM DTD Suite. [Rosenblum 2010] Focusing on the JATS standard alone, there have been seven core iterations since 2003 [Wikipedia], and several intermediate versions (Table I). That alone accounts for significant variation over time. Some of the earlier versions are not totally compatible with the current versions and are also missing desirable features that were implemented later.

Table I

Core iterations of the JATS standard since its inception.

|

NLM JATS, version 1 |

|

|

NLM JATS, version 2 |

|

|

NLM JATS, version 3 |

|

|

NISO JATS, version 1.0 |

|

|

NISO JATS, version 1.1 |

|

|

NISO JATS, version 1.2 |

|

|

NISO JATS, version 1.3 |

|

Interpretation of Standards and Errors in Execution

The various standard versions are complex, and not everyone is fully conversant with all the elements and attributes or even has the same understanding of how to interpret them. Because publishers often work with multiple parties and vendors to produce XML based on the standard at the time, variances in tagging interpretation and application, differences in the specifications, and human error all contribute to inconsistencies. Production errors, interpretations, and variations in production support can all lead to discrepancies in the XML files over decades of publishing.

Archival Content is Often Not Consistent with Current Content

Publishers with significant history have likely published content in various NLM/JATS

specification iterations. Yet few publishers do a systematic review of their content

to analyze and identify key metrics about their source files and corresponding digital

assets

(e.g., .jpg, .gif, .XLSX, .zip, .tif, .mov, etc.) to bring them up to a common standard; a variety of standards continue to

proliferate.

Some of these earlier materials may be following obsolete standards. Publishers may be missing out on important functionality by not updating to the current standard. Understanding what is in a publisher’s legacy archives is a critical first step.

A major factor for many publishers is that not all their content is in XML. Depending on the investment in publishing technology, legacy content could comprise PDFs, PDFs with XML header information, or XML files in an outdated DTD, along with dozens of other possibilities.

Structural Issues in JATS XML and Corresponding Files

An XML file can parse cleanly and be technically valid but still contain issues that impact interoperability or cause problems for the end user accessing the file.

When analyzing large content collections, often spanning decades, more often than not we encounter numerous problems that reduce the effectiveness of how organizations can use their content. The following are examples we’ve seen over the years.

Missing DOIs

Web hosting platforms such as Silverchair, HighWire, and Atypon use the Digital Object

Identifiers (DOI) as a unique identifier for the article or paper. If the DOI is missing

or not valid, the content may not load properly, or may not be loaded to the platform

at all. If the DOI is not unique, subsequent loads might overwrite earlier content

or cause other problems. Missing DOIs found at load time

can often delay the whole process.

Identifying these problems in advance provides time to fix the problem before the

crunch. Missing identifiers can be found with lookups against publishers’ records

or standard databases like Crossref. Or if the DOIs do not exist, they can be created.

But all this is best done earlier rather than at platform load time.

Cross-Reference Errors

Missing and incorrect cross-references (<xref>s) are common. Examples are image files that are

not

referred to in the text, or references in the text to images that are

not

found. Incorrect and empty <xref> tags must be resolved, or they will not properly render on hosting platforms and

will not find the items referred to.

Following are some examples in a recent analysis:

-

Incorrect

<xref>to"ref008"– Cross-references to something that does not exist. As a result, the XML would fail to parse in many platforms, and the file would fail to load onto a website. -

<xref>with no text:<xref ref-type="table" rid="fig"/>–<xref>s with the incorrect@ref-type, which requires correction for the link to work properly. This example shows that the reference is to a table, but the ID does not match because it points to a figure. -

<xref>with no text:<xref ref-type="fig" rid="F_EEMCS-04-2016-0059008"/>–<xref>s missing text that are either being used as placeholders for target objects to render or to represent a span. -

<xref>with no text:<xref ref-type="table" rid="tbl8"/>–<xref>s that exist for no discernible reason

Invalid Assets

Aside from the text, there are other objects (assets) like images, math, video, etc., which typically need to be incorporated into the displayed document. Requirements for assets vary across hosting platforms, which may have different file formats requirements, naming conventions, and other specifics.

Following are common examples of issues with assets. While these problems do not appear as XML errors, they demonstrate the necessity of analyzing archival and current content and the supporting files that make a journal article or book chapter complete:

-

Mismatch between reported image type 'TXT' and file extension 'EPUB'

-

File format error

-

Asset is size 0

-

Missing asset:

<graphic xlink:href="09526860010336984-0620130402008.tif"/>

Other Structural Problems

XML files can be valid and parse cleanly, and still contain structural issues that render the content not fully usable and findable.

Incomplete Metadata

-

A missing article title will cause load errors when loading content to a hosting platform.

-

A missing chapter title will cause content to render incorrectly and reduce accessibility to the visually disabled because the chapter heading will not be found correctly in a reading machine.

-

Invalid dates, such as dates missing a parameter or in an obsolete format, can cause load errors and warnings and will cause materials to be out of order and not be organized correctly.

Parsing Errors

Earlier materials prepared with older versions of DTDs might have been valid at one time but may not meet current requirements. When analyzing large content collections, we frequently need to identify and obtain earlier DTDs to parse and update the source content.

The following are common issues that may arise with tags and their attributes; these all happen to relate to errors in MathML, for which tagging might be particularly complex:

-

Attribute

@columnspacingis not allowed on element<mstyle> -

Attribute

@fontfamilyis not allowed on element<mi> -

Attribute

@otheris not allowed on element<mrow>

Volume/Issue/Page Publication Metadata Differences

Discrepancies that were not noticeable in earlier lower-functionality systems might cause problems when moving to a system with more sophisticated requirements. A common example would be the non-matching publication dates for articles within a journal issue. Since one would expect print publication dates (ppub) within an issue to be consistent, this condition might indicate an error.

When volume and issue information is incorrect or inconsistent, the article will not

live

in the right place in the database. It may not be found after loading or may cause

the creation of extraneous journal issues.

In any event this is the kind of information that would be brought to the attention of the client project manager, who can decide how to proceed. A typical approach would be to update the journal metadata at the article level to make sure it’s all uniform and to allow articles to be loaded independently when needed.

The following typical messages demonstrate issues that arise with problematic publication dates:

-

Different pub-date-ppub values in unit: "2011-02-22" (6 files), "2011-08-02" (19 files)

-

Different pub-date-ppub values in unit: "2020-01-02" (1 files), "2020-01-08" (1 files), "2020-03-20" (1 files), "2020-04-04" (2 files), "2020-04-09" (3 files), "2020-04-13" (1 files), "2020-04-27" (2 files), "2020-06-06" (1 files)

Unusual but True

Findings in JATS

Until a collection is deeply mined and analyzed, it’s hard to imagine the issues that might be found in a large collection spanning many decades. Just when we think we’ve seen it all, we find something new:

-

Bad dates such as all 9s or 0s for an

@iso-8601-date -

The brackets around tags given the hex Unicodes for the

less than

andgreater than

symbols (i.e., < and >) thus making the tagging visible in the rendering -

Special characters given both the UTF and the hex Unicode following each other

-

DOCTYPEmissing from the JATS XML file and thus not able to render

Comprehensive Content Cleanup Process

Working at scale on cleaning up XML quality and compatibility in a large collection requires a methodology and automated tools.

Manual error detection in XML documents is time-consuming and error prone. DCL’s approach has been to use extensive automation and AI to identify and highlight structural problems. An automated large-scale analysis exposes data corruption, identifies errors that will prevent interoperability, and maintains the quality standards of valuable assets.

Identifying What You’ve Got

The first step sounds simple, but sometimes isn’t – identify all your content and

make sure it’s available to analyze. The publisher’s collection, organized in hierarchical

folders and files, undergoes an initial process phase to organize the content into

units,

extract relevant metadata from the files, and verify completeness. A unit

might be an article, a journal issue, a manuscript, conference proceedings, or anything

else. For books, either each chapter or an entire book might be considered a unit.

This processing phase also inventories digital assets such as .jpg, .gif, .XLSX, .r, .JSON, and other file types. The technologies used include DOM, XPath, regular expressions,

custom algorithms, Exif tools, PDF tools, and Ghostscript.

Architecting the Process

Because of the sheer amount of content that might need to be audited, often a million pages or more, it’s important that software is architected to be multithreaded, restartable, and incremental.

-

Multithreading enables simultaneous execution of various parts of a program, allowing many parts of the data collection to be processed in parallel.

-

A restartable application allows software to resume from the point of interruption after system downtime or failure. It can be frustrating to have to restart from the beginning after twelve hours of processing.

-

Incremental processing minimizes overall processing demands by handling only new data partitions added to an already processed content set rather than reprocessing the entire content collection.

XML Parsing and Validation

The next phase involves health checks on digital assets including validating XML files. Software performs semantic checks on XML files at the unit level, journal title level, and across the collection. Additionally, the relationships between the XML files and digital assets are analyzed and verified.

Meeting Business Rules: DCL’s Global QC Approach

Aside from the technical details defined by the DTD or Schema, there are other publisher- or journal-specific rules on content that organizations should check to ensure the content base is consistent across the collection. We call those business rules; these are rules that apply to a particular business or situation.

Following are just a few examples from DCL clients to illustrate the kinds of rules that might be applied:

-

Figure and table labels should not have end punctuation:

<label>Table 1</label> -

Inside a table cell the element for a graphic should be

<inline-graphic>rather than<graphic> -

<article-title>should not be in all caps

Business rules can vary from client to client and even between one project and another, thus architecting a configurable system that defines and analyzes these rules across large content sets is essential.

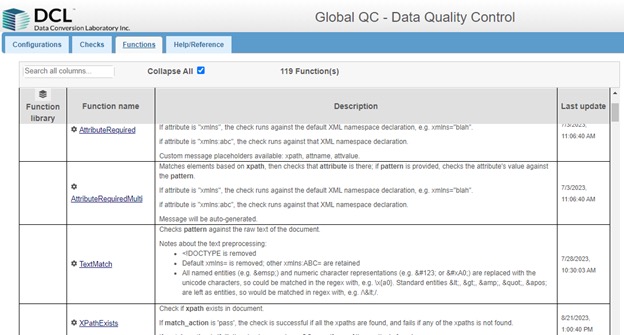

DCL’s Global QC is a platform to facilitate defining business rules without programming and applying them across a large corpus (Figure 1). Quality checks can be performed on individual XML files or throughout the corpus. The checks can be restricted to DTDs or XML schemas, configured to exclude/include specific nodes within the content, be conditional based on business scenarios, and easily be grouped together into sets for consistent handling. We’ve also built an intuitive user interface to create, modify, and delete verification checks and apply them to the content to address common scenarios and data anomalies. Each individual or related set of checks may be assigned severity levels and customized error messaging to enable efficient downstream error handling.

Figure 1: Global QC by DCL is a configurable tool to validate large XML collections.

Content Structure and New Challenges

In addition to the benefits already discussed, improved content structure is essential for addressing the industry’s latest challenges. The creation and dissemination of scholarly content is accelerating at an unprecedented rate, bringing with it a host of new obstacles to overcome. Effective content structuring will be crucial in navigating and managing this explosive growth and solving the challenges that come with it.

Seamless Interchange

Quality XML is crucial for content access, interpretation, and exchange. If publishers routinely analyzed their collections, the anomalies, errors, and issues that have been lurking might have been revealed earlier, which would have already improved interchange and discoverability. This part is not new; what is new is that we don’t know all the places we want content to go or all the new ways we will want to interchange. Having content rigorously standardized ensures that you will be ready for what comes next.

Research Integrity

Scholarly publishing is struggling with problematic papers getting into publishing workflows via papermills and citation cartels. XML is increasingly used to effectively identify potential issues related to research integrity by making it easier to verify the accuracy of references and author names and affiliations with third-party databases (e.g., Crossref, Scopus, Web of Science, CCC, ORCID).

XML tagging and metadata provide a structured framework for analyzing journal articles at scale, enabling publishers to effectively identify potential issues related to research integrity. DCL is building a library of automated research integrity checks to flag and report suspected issues at scale. Some integrity checks involve traditional XML analysis and validation, but other tools are also used.

Adjacent technology, tools, and processes invoked for XML analysis, such as entity extraction, machine learning, and natural language processing, are employed for automated analysis, data mining, and reporting across an entire collection. Some integrity checks we’ve been applying or studying include

-

Author Names and Affiliations: Tag and verify author identification against third-party databases (CCC, ORCID, etc.).

-

References and Citations: Tag and verify references and citations with external databases to verify accuracy (Crossref, Scopus, Web of Science, etc.).

-

Tortured Phrase Analysis: Analyze unstructured and structured content for tortured phrases or inconsistencies for closer scrutiny. Tortured phrases manifest as words that are translated from English into a foreign language and then back to English and are often an indication of paper mills being employed. A classic example is the phrase

counterfeit consciousness

instead ofartificial intelligence.

-

iThenticate Similarity Score: Process large volumes of iThenticate reports and combine with publisher-specific business rules to report potential plagiarism.

-

Image Forensics: Apply digital forensics to identify potential plagiarized or manipulated images.

AI Initiatives

The rapid growth of AI in the research community has given new additional importance to structured content. While AI development is still in its infancy, it’s becoming clear that AI systems will produce better answers when it gets more clues that well-structured data and metadata provide. Adopting a consistent and accurate XML structure ensures that organizations will have content that will be AI-ready and helps preserve the value of their content assets. This will become additionally important when combining RAG (Retrieval-Augmented Generation) with structured content in an LLM (large language model) to improve the accuracy, timeliness, and factual grounding of LLM output.

Structured content provides potential advantages for finetuning an LLM such as OpenAI’s GPT model to refine its knowledge based on a specific domain. [Abbey 2024] By training on verified and structured data, the AI model can better parse and process information and ultimately minimize hallucinations.

Structured content has many potential advantages over unstructured content when it comes to training and finetuning language models:

-

Contextual understanding: structured content metadata provides valuable context and deeper semantic meaning for a language model.

-

Easier data processing: the DTD improves how the algorithm parses, processes, and understands content. This leads to efficient training and better results than with unstructured content. [Van Vulpen 2023]

-

Improved accuracy: structured content is organized and follows a consistent hierarchy that reduces the ambiguity and noise in content. This improves LLM performance, as a model can easily identify patterns and relationships in content.

Navigating Toward the Future

The future depends on what you do today.

— Mahatma Gandhi

Scholarly journal and book publishers are in the unique position of publishing groundbreaking news while also preserving the record of the scientific method in its true form. Legacy journal and book content represent a snapshot in time of knowledge, methods, and discoveries that have shaped our understanding of the world.

As the pace of scholarly publishing accelerates and the number of published materials grows, maintaining the integrity and accessibility of both current and legacy content is more critical than ever. Publishers must ensure that information is preserved accurately and remains easily accessible to researchers, scholars, and the public, allowing future generations to build upon these foundations of past research and knowledge. This dual responsibility of disseminating new findings while safeguarding historical content highlights the importance of robust content management, industry standards, technologies, and practices in the scholarly publishing industry.

References

[Rosenblum 2010]

Rosenblum, A. (2010) NLM Journal Publishing DTD Flexibility: How and Why Applications of the NLM DTD Vary

Based on Publisher-Specific Requirements.

Journal Article Tag Suite Conference (JATS-Con) Proceedings 2010.

[Wikipedia]

Wikipedia contributors. (2024, June 3). Journal Article Tag Suite.

In Wikipedia, The Free Encyclopedia. Retrieved 17:41, June 25, 2024, from

https://en.wikipedia.org/w/index.php?title=Journal_Article_Tag_Suite&oldid=1227032206

[Abbey 2024]

Abbey, A. (2024, March 26). Structured content: A secret weapon for finetuning AI.

Retrieved, June 29, 2024, from

https://www.rws.com/content-management/blog/structured-content-a-secret-weapon-for-finetuning-ai/

[Van Vulpen 2023]

Van Vulpen, M. (2023, April 25). Unlocking the Secrets of GPT: How Structured Content Powers Next-Level Language Models.

Retrieved June 29, 2024, from

https://www.fontoxml.com/blog/unlocking-the-secrets-of-gpt-how-structured-content-powers-next-level-language-models/