Introduction

In the digital humanities, we encode texts for three primary reasons:

- to help ensure longevity of the digital object, at least in internet time
- to help us analyze or explore the text in a manner congruent with our research or teaching
- to help us share the text with others, whether for reading, similar analysis, or for analysis we might not have thought of or even imagined (and whether delivered in digital form or in print; and whether for profit, professional accreditation, or altruistic reasons)[1]

The first goal (longevity) can be met by using a very common, well-defined, open standard character encoding (i.e. Unicode), and by sticking to human-readable, well-defined, open standard text formats (e.g. TeX or XML). That is, by avoiding any proprietary or binary formats.[2]

The second goal (analysis) can be met by sticking to what we used to call “descriptive markup” [MarkFut] but is now better described as “indicative logical markup” [Flaw], and by developing a sufficiently detailed description of your data for the purposes envisioned. That is, by developing a markup system that separates out the parts of the document you find interesting and describes that which is interesting about them in sufficient detail.

The third goal (sharing) can only be met if the others you are sharing with understand that which you are sharing. It does you no good whatsoever for me to send you a manuscript transcription in a proprietary binary format which your computer cannot read, and not much good to send it in an open text format you do not understand. In order for you to make use of the transcription, we need to have a shared understanding of both the operating details and semantics of the markup language used.

However, because the details of humanities texts that scholars wish to engage with and the assertions scholars wish to make about these texts are (almost definitionally, I daresay) so widely varied, no single markup language could possibly represent them all. That is, no markup language can meet the second goal for all scholars simultaneously. A given markup language can represent some of the analytic needs of all of the scholars, and all of the analytic needs of some of the scholars, but it cannot represent all the analytic needs of all of the scholars. This holds whether the markup language is a widely used standard language like TEI, or a home-grown language specific to the purpose.

Even if the third goal were about sharing data with a particular individual, a shared language would be required — in order for a language to be shared, both the sender and the receiver, regardless of discipline, whether they know each other or not, or whether they can even talk to each other or not, must agree on it, even if only implicitly. But in fact the third goal is about sharing data in a collaborative fashion with almost arbitrary interested parties. In this case, an agreed upon and widely known markup language is required.

The TEI Guidelines try to be that language. The full title of the impressive and ubiquitous guidelines is Guidelines for Electronic Text Encoding and Interchange (emphasis on “Interchange” added). But with the advent of TEI P5, the focus, I claim, has been more on interoperability than on interchange. This paper will explore the meaning of the two terms, particularly with respect to the aforementioned goals of text encoding, and the impact of trying to achieve interchangeability or interoperability on encoding languages (TEI in particular) and thus the communities served.

Definitions

Standard dictionary definitions of these terms do not provide a particularly useful perch. E.g., the online edition of the Oxford English Dictionary definition of interchange (as a noun) includes the concept of reciprocity: “The act of exchanging reciprocally; giving and receiving with reciprocity; reciprocal exchange (of commodities, courtesies, ideas, etc.) between two persons or parties.” [oeinch] Merriam-Webster’s definition of the verb interchange is more succinct, but still has at its core the idea of reciprocity, i.e. of swapping places: “to put each of (two things) in the place of the other” [mwinch].

The Guidelines do not formally define the term, but the meaning can be gleaned from the operational definition provided: “The TEI format may simply be used as an interchange format, permitting projects to share resources even when their local encoding schemes differ.” (http://www.tei-c.org/release/doc/tei-p5-doc/en/html/AB.html#ABAPP2)

As for interoperable, the OED is quite succinct, but somewhat unhelpful: “Able to operate in conjunction.” [oeinop] Merriam-Webster is mildly helpful, although it is not clear whether their systems include humanities encoding systems: “ability of a system (as a weapons system) to work with or use the parts or equipment of another system” [mwinop].

The Guidelines occasionally use the word interoperability (4 times), but never define it. I would like to interpret the use of interchange and interoperability in the Guidelines as implying that they are different: “As different sorts of customization have different implications for the interchange and interoperability of TEI documents, …” (http://www.tei-c.org/release/doc/tei-p5-doc/en/html/USE.html#CFOD). However, it is possible that the author of that sentence thinks of “interchange” and “interoperability” as synonyms, and is just trying to be emphatic or completely clear.

But moreover, these are not the definitions the designers of TEI (and perhaps other data interchange systems) had in mind when they originally discussed interchange, whether negotiated or blind. E.g., back when TEI P1 was released (1990-07), many of the concerns about interchange were concerns about just getting the data from one machine to another with the same characters. Only then could you worry about the syntax of the encoding system and the semantics of the particular encoding language.

It is no small accomplishment that today character-faithful interchange is easy. It has taken the advent of more standardized character encoding (i.e., ISO 10646 and Unicode), more widely and uniformly supported transport protocols (e.g., FTP and HTTP), standard encoding schemes to work around 7-bit mail gateways (e.g., base64 encoding), and standard ways to indicate content type (e.g., MIME), for us to be able to transport files from one place to another with such ease that we barely think about it. These great strides in computer technology allow us to take faithful transmission of a series of characters for granted; thus I will not consider it further in this paper.[3] Here I consider these terms in light of the syntax and semantics of the documents in question, rather than the operational details of how to move the documents from here to there.

So, for purposes of this discussion of the transfer of encoded information from one party to another, I’d like to present operational definitions of these terms as follows.[4]

| Term | Definition |
| --- | --- |
| negotiated interchange | you want my data; we chat on the phone or exchange e-mail and talk about the format my data is in, and that which your processor needs; eventually you have the information you need, and I send you my data; last, you either change my data to suit your system or change your system to suit my data as needed. Both human communication and human intervention are required. |
| blind interchange | you want my data; you go to my website or load my CD and download or copy both the data of interest and any associated files (e.g., documentation or specifications like a TEI ODD, a METS profile, or the Balisage tag library); based on your knowledge of my data that comes from either the documents themselves or from the associated files (or both), you either change my data to suit your system or change your system to suit my data as needed. Human intervention, but not direct communication, is required. |
| interoperation | you want my data; you go to my website or load my CD and download or copy the data of interest; you plug that data into your system. Little or no human intervention is required, and certainly no direct communication. Interoperability is not symmetrical. For example, this paper conforms to the Balisage Conference Paper Vocabulary. If I plug it into a generic DocBook processor, … |

So an interoperable text is one that does not require any direct human intervention in order to prepare it to be used by a computer process other than the one(s) for which it was created. There are two caveats worth mentioning, here.

- Merely for the sake of simplicity, certain “lame” interventions like changing the path in the SYSTEM identifier in the DOCTYPE declaration may be exempted. That is, I am not here going to consider how much human intervention is required to disqualify a text from being interoperational. I am not suggesting that there is no line, only that it is a matter for future work and consideration. Note that I am, however, precluding the possibility that any human intervention disqualifies a text from being interoperational. E.g., I would not consider a human issuing the command needed to ask a given process to operate on a particular text human intervention for this purpose.
- In many cases of document exchange, the recipient of a file will write a program such that future iterations of the same process do not require human intervention. In this case, the target process has changed from P to P′, such that the document, which can be considered interchangeable with respect to P, is interoperational with respect to P′.

Variants of Interoperability

Using these definitions of interoperability, of course, means that whether or not a document can be considered interoperational depends very much on the process with which we would like to interoperate. For simple enough processes, every XML file is interoperational. That is, any XML file will operate with the process. Consider, e.g., the process that counts elements in a file (count(//*)): no matter what non-conformant, arbitrarily invalid, or silly TEI documents you send this process, no human intervention will ever be needed.[5]
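As a rough illustration of such a trivially interoperable process, the following Python sketch counts elements in the manner of count(//*). It assumes only that the input is well-formed XML; the function name `count_elements` and the two sample documents are invented for this example.

```python
import xml.etree.ElementTree as ET

def count_elements(xml_text):
    """Count every element in a well-formed XML document, like XPath count(//*)."""
    root = ET.fromstring(xml_text)
    # Element.iter() yields the root element and all of its descendant elements.
    return sum(1 for _ in root.iter())

# Two made-up inputs: one vaguely TEI-shaped, one deliberately silly.
tei_like = "<TEI><text><body><p>One <hi>two</hi></p></body></text></TEI>"
silly = "<banana><lb/><lb/><teiHeader/></banana>"

print(count_elements(tei_like))
print(count_elements(silly))
```

Neither validity nor TEI conformance matters to this process: both inputs are handled without anyone inspecting or adjusting them first.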

But there are cases toward the other end of the spectrum, as well. It is hard to imagine a process that could read in an arbitrary conformant TEI file and typeset a book matching the publisher’s house style from it. Take, for example, just the case of getting headings into the proper case. If house style is that headings should be title-cased with acronyms in small caps, some of the following perfectly reasonable, valid, conformant TEI <head> elements will be very hard to process properly.

- <head>Surgery via LASER: right for you?</head>
- <head>Surgery via LASER: Right for You?</head>
- <head>SURGERY VIA LASER: RIGHT FOR YOU?</head>
- <head>Surgery via <abbr>LASER</abbr>: right for you?</head>
- <head>Surgery via <choice><abbr>LASER</abbr><expan>Light Amplification by Stimulated Emission of Radiation</expan></choice>: right for you?</head>
- <head>Surgery via <choice><abbr>LASER</abbr><expan>light amplification by stimulated emission of radiation</expan></choice>: right for you?</head>
- <head type="main">Surgery via LASER</head><head type="sub">right for you?</head>
- <head type="main">Surgery via LASER</head> <head type="sub">right for you?</head>
- <head type="main" rend="post(: )">Surgery via LASER</head><head type="sub" rend="break(no)">right for you?</head>
- <head type="main" rend=":after{content(: )}">Surgery via LASER</head><head type="sub">right for you?</head>

The point here is that being interoperable is not a static, independent feature of a document. Being written in French, being a standalone XML document, these are static, independent features. But being interoperational depends entirely on which application you are trying to interoperate with. That is, interoperationality is very context dependent.
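To make the heading example a little more concrete, here is a minimal Python sketch of two strategies a typesetting process might use to find the acronyms that need small caps. Both function names are hypothetical, the all-caps heuristic is an assumption made for illustration, and the fragments are parsed without the TEI namespace for brevity.

```python
import xml.etree.ElementTree as ET
from typing import List

def acronyms_marked(head_xml: str) -> List[str]:
    """Acronyms the encoder tagged explicitly with <abbr>."""
    head = ET.fromstring(head_xml)
    return [abbr.text for abbr in head.iter("abbr")]

def acronyms_guessed(head_xml: str) -> List[str]:
    """Heuristic fallback (an assumption): any all-caps word of 2+ letters."""
    head = ET.fromstring(head_xml)
    text = "".join(head.itertext())
    words = [w.strip(":?") for w in text.split()]
    return [w for w in words if len(w) > 1 and w.isupper()]

plain = "<head>Surgery via LASER: right for you?</head>"
shouting = "<head>SURGERY VIA LASER: RIGHT FOR YOU?</head>"
tagged = "<head>Surgery via <abbr>LASER</abbr>: right for you?</head>"

for head in (plain, shouting, tagged):
    print(acronyms_marked(head), acronyms_guessed(head))
```

Relying on markup finds nothing in the first two headings, while guessing treats every word of the all-caps heading as an acronym. Which strategy is appropriate depends on how a given project encoded its headings, and that is knowledge the process does not have on its own.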

Interoperability is Difficult

Many XML user communities will at least bristle at my assertion that interoperability is difficult, and some people will even object, using their own projects as counter-examples. For example, it may be quite easy to take documents in SVG or Triple-S and use them in an application other than the one for which they were originally written. But I hold that interoperationality is not just about use of XML data in current predicted applications (“I will want to use this file in an SVG viewer”), but rather is also concerned with the use of data with both unintended applications (e.g., linguistic analysis of survey questions) and future applications (e.g., submitting a set of vector graphics images to software that makes a mosaic of them).

Notice that I am being (deliberately) self-contradictory, here. I am suggesting that interoperability is always contextual, that we need to know what application we are trying to operate with in order to measure our success at interoperationality; and simultaneously that in order to be considered interoperational, a document should work with applications that are not only unforeseen, but haven’t even been written yet. If the application has not been written yet, it is obviously impossible to know whether or not our data will work with it. It is this very contradiction that drives me to say that interoperationality is hard. We cannot know the various contexts in which we would like our files to achieve interoperationality.

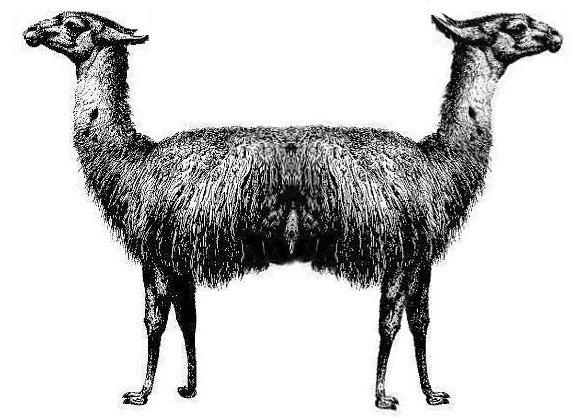

Figure 2: Pushmi-Pullyu

One take on the Pushmi-Pullyu. A somewhat more authentic take (IMHO) is available as a video on YouTube.

This contradiction does not necessarily make interoperationality difficult for a language like SVG, which has both very tight operational (as opposed to declarative) semantics and a sharp focus on particular kinds of processing. But TEI is a declarative language that is intended to record, for various disparate purposes, an enormously wide array of texts (to wit, those of interest to humanities scholars). Thus its semantics are necessarily somewhat looser, its ambitions are sky-high, and its field of view is very wide.

For example, consider an application (present or future) that for some purpose wishes to process the authorial words in the main content of a TEI-encoded document; that is, we need to parse out the words, but ignore things like metadata, running heads, editorial notes, chapter titles, etc. Many TEI projects have had reason to extract the words in this way, and have found it not particularly difficult to do. But doing so for a generic TEI file turns out to be impossible, and many people find this surprising. (Remember that TEI, like many languages, is really a framework for building a particular markup language with many, but not all, features in common with other TEI languages.)

Before demonstrating that this is impossible, I will first elaborate on one of the reasons it is hard: end-of-line hyphenation. In order to parse words out from the input document, we need to know whether a hyphen at the end-of-line is hard or soft. In many cases this is obvious to a human (“pro-tection” vs. “pro-choice”), but in some it is not at all clear (e.g., “Sea-mark” [CeHx]), and it is generally harder for computers than for humans. The TEI currently (P5 v. 1.9.1) provides at least five different methods for encoding soft hyphens at end-of-line, three of which might use different characters for the representation of the hyphen itself (which is displayed as a hyphen (U+002D) in the listing below), and some previous releases of TEI allowed even more. This may strike some as silly or even excessive, but in most cases these choices make sense. For example, the TEI cannot prescribe to scholars who are not remotely interested in original lineation or soft hyphens that they record these features.

pro-

tection

protection

pro<lb break="no"/>tection

pro-<lb/>tection

pro-<lb break="no"/>tection

The multitude of encoding methods for soft hyphens at end-of-line would not be a problem if an application could easily query the document and ascertain which encoding was used. The good news is that TEI does actually provide a specific construct[6] for encoding how end-of-line hyphens are handled. The bad news is that the vocabulary available to describe the different encodings is insufficient to the task; furthermore, it is optional, and many, if not most, projects do not use it.
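The following Python sketch illustrates why a word-extracting process needs to be told which convention is in force. It is a simplification under stated assumptions: it handles only <lb> children of a single <p>, parses fragments without the TEI namespace, and takes a hypothetical, project-supplied flag `hyphen_before_lb_is_soft` that is not a TEI construct.

```python
import xml.etree.ElementTree as ET

def extract_words(p_xml, hyphen_before_lb_is_soft):
    """Extract words from a <p>, resolving end-of-line hyphenation.

    hyphen_before_lb_is_soft is a hypothetical project-level setting saying
    whether a hyphen directly before a plain <lb/> is a soft hyphen.
    """
    p = ET.fromstring(p_xml)
    text = p.text or ""
    for lb in p:  # assumption: the only child elements are <lb/>
        joins_word = lb.get("break") == "no"
        if text.endswith("-") and (joins_word or hyphen_before_lb_is_soft):
            text = text[:-1]   # treat the hyphen as soft: drop it, join the halves
        elif not text.endswith("-") and not joins_word:
            text += " "        # ordinary line break between two words
        text += lb.tail or ""
    return text.split()

soft = '<p>pro-<lb/>tection</p>'
hard = '<p>pro-<lb/>choice</p>'
print(extract_words(soft, hyphen_before_lb_is_soft=True))
print(extract_words(hard, hyphen_before_lb_is_soft=True))
print(extract_words(hard, hyphen_before_lb_is_soft=False))
```

With the flag set, the first fragment yields “protection” but the second yields the non-word “prochoice”; with it unset, “pro-choice” survives but “pro-tection” would too. The markup is identical in both fragments, so the correct treatment has to come from outside the document.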

End-of-line hyphenation is but one of many problems that make extracting just the “words” from generic TEI files difficult. Some, however, actually make it impossible.

For example, in TEI annotations or notes are recorded in a <note> element, whether the note is an aside, an annotation, a footnote, marginalia, a textual note, a comment to the encoder’s manager, or whathaveyou. The TEI provides a @type attribute for differentiating these different kinds of a note; but since there is no pre-determined controlled vocabulary for this attribute (rather, projects are expected to come up with their own vocabularies), and furthermore the attribute is not required, generic TEI processing software cannot really do much with it (at least not without human intervention). Thus while it is easy for any given project (which knows how annotation vs. textual notes were encoded) to differentiate, it is impossible for general-purpose TEI software to do so.
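A small Python sketch may help show where the project-specific knowledge enters. The function `authorial_words`, the parameter `editorial_note_types`, and the @type values "editorial" and "authorial" are all invented for this illustration; a real project would have its own vocabulary, and the fragment omits the TEI namespace for brevity.

```python
import xml.etree.ElementTree as ET

def authorial_words(xml_text, editorial_note_types):
    """Collect words, skipping <note> elements whose @type is listed as editorial.

    editorial_note_types is project-specific knowledge that a human supplies.
    """
    def walk(el):
        if el.tag == "note" and el.get("type") in editorial_note_types:
            return []  # skip the note's own content; its tail is handled by the parent
        found = (el.text or "").split()
        for child in el:
            found += walk(child)
            found += (child.tail or "").split()
        return found
    return walk(ET.fromstring(xml_text))

# Hypothetical sample with made-up @type values.
sample = ('<p>I saw the sea<note type="editorial">sic</note> today'
          '<note type="authorial">it was grey</note></p>')

print(authorial_words(sample, {"editorial"}))  # project knows its own vocabulary
print(authorial_words(sample, set()))          # generic software knows nothing
```

The code itself is generic; what differs between the two calls is only the set of type values, and that set cannot be derived from the TEI Guidelines or from the document alone.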

Interchange is (not) Difficult

Some might think that, on the surface, interchange is harder than interoperation. After all, it requires human intervention. And certainly dropping a file into a system and presto it works is far easier than doing work to get your system to process the file as desired. But we’re talking here about the effort needed to make a file that is interoperable vs. interchangeable. As I’ve just described, for many purposes it will be impossible to process a random TEI file with a given system without intervention. Thus however difficult it is to make interchangeable files, it is easier than making interoperable ones.

The bad news is that it can take quite a bit of work to make a file easily interchangeable with generic future processes. The good news is that the effort put into making it interchangeable does double-duty, and simultaneously increases the data’s longevity by making curation of the file easier. (After all, porting a file forward to a different platform of some sort is a process, and we can think of the file as being interchangeable with that process.)

The reason it takes work to make a file interchangeable is because (in general) it requires that the semantics, not just the syntax, of the encoding be explained to the humans involved with consuming the file. In order to know which <note> elements should be ignored, our prospective word-counter needs to know what the various possible values of the @type attribute mean. Yes, one can imagine a system in which the values are inherently obvious to one who speaks the natural language which the values (which are arbitrary NCNames) are designed to mimic ("authorial", "transcriptional", etc.), but this is not the general case, and moreover there is no guarantee that the future consumer of the file speaks that natural language or uses those terms the same way.

Thus contemporaneously generated detailed prose documentation of the indicative logical markup constructs used to mark up a text can significantly facilitate the use of the text in future systems and processes, both those we can predict and those we cannot, both by those that generated the text and by unforeseen recipients of it. Put more succinctly, providing descriptions of the descriptive markup can make future interchange of a document possible where it might have been impossible, or easy where it might have been hard.

Interoperability vs. Expression

Inequality will exist as long as liberty exists. It unavoidably results from that very liberty itself.

— Alexander Hamilton [GM]

It is an old chestnut of political theory that liberty and equality are at least at odds with one another, if not completely incompatible societal goals.[7] Any economic system, social engineering, regulation, or legislation that enforces equality will limit liberty, and any that encourage liberty will make equality less likely to obtain.

Liberty in general, and freedom of thought, speech, and the press in particular, drive both individuals and (more importantly) society to improve.

It is not by wearing down into uniformity all that is individual in themselves, but by cultivating it and calling it forth, within the limits imposed by the rights and interests of others, that human beings become a noble and beautiful object of contemplation

— John Stuart Mill [OL]

In the “marketplace of ideas”, eventually the true and good win out.

That said, there are societal advantages to equality as well. A pure communist society eliminates not only relative poverty and hunger, but also alienation and class oppression.

Likewise (I claim), interoperability and expressiveness are competing goals constantly in tension with each other. While the analogy is far from exact, interoperationality is akin to equality, and expressiveness is akin to liberty. And the very advantages ascribed to political liberty in the social sphere can be ascribed to the liberty of expressiveness in the encoding of humanistic texts. The free expression of scholarly ideas embodied in the encoding of a text puts our encoding systems to the test, and helps to improve them.

Those areas of an encoding system that are designed to make the resulting documents more equal to all the other documents that will be used in the target process and thus more interoperational are going to curtail the encoder’s liberty to be expressive. Those areas of an encoding system that are designed to permit the liberty of expressiveness (e.g., the @type attribute of <tei:note>) are going to permit documents that are less like (i.e., less equal to) the other documents intended to be consumed by the target process, and thus less interoperational.

Said another way, to make her document (more) interoperational, an encoder either needs to know the application semantics of interest ahead of time, or needs to stick rigidly to prescriptions (sometimes, but not always, expressed as schemas) that she believes the target application (which may not be known) will be able to understand. But rigidly sticking to the encoding that (she thinks that) the target application needs, or “encoding to the stylesheet”, robs the encoder of the ability to be expressive in ways that (she believes) might not be correctly handled by the target application. Common sense says this could result in, and my experience says this often does result in, encoders deliberately using encodings that are substandard, less faithful to the document, or outright incorrect per the encoding language, in order to achieve the desired results.

In the general case, interoperationality (at least of random TEI-encoded files) is impossible. But that does not stop encoders from striving for it, and in so doing curtailing their own ability to express the features of interest to them in individual ways. While this is not always a bad thing, differing expressions of content are part of the force driving scholarship forward and cannot be entirely forsaken for the short-term goal of avoiding the work needed for interchange as opposed to interoperation.

Expression and Interchange

Freedom is nothing else but a chance to be better

— Albert Camus

For humanistic scholarship variation of expression is a necessity. Ask a room full of scholarly editors to examine a document in their field, and you will have as many interpretations (i.e., encodings) of the document as there are editors. And no matter how expressive your underlying language is, if you ask enough scholars you will come across cases where the interpretation of the document cannot be straightforwardly expressed in the standard language. Additional expressiveness, a deviation from the standard, will be required.

Just making such deviations silently is a recipe for disaster. Consumers of the document will presume that it adheres to the standard and will find the more expressive bits either uninterpretable or, worse, will interpret them incorrectly.

But carefully documenting such deviations has multiple benefits:

-

the process of creating the documentation focuses the encoder on the essentials of the interpretation

-

the documentation can help push the standard in a certain direction, or be used by others with similar needs to avoid re-inventing a wheel

-

the documentation will help future consumers of the document avoid incorrect interpretations

-

the documentation will help future custodians of the data to curate it

Conclusion

Order without liberty and liberty without order are equally destructive.

— Theodore Roosevelt

- Whereas an attempt to make a document interoperational with a particular process or application is likely to result in “tag abuse”;
- Whereas an attempt to make a document interoperational with any (or an array of) unforeseen and future processes or applications is likely to result in decreased expressiveness (perhaps to the point of defeating the initial purpose of the encoding);
- Whereas relying on real-time or near real-time human-to-human communication to relay the information required in the exchange of (non-interoperational) documents can be inefficient and limits the potential set of document recipients to those with the capability to communicate with the encoder;
- And whereas generating sufficient formal and natural language documentation to make blind interchange at least possible if not easy is useful to the current encoders writing the documentation, to current and future recipients of the encoded documents, and to future curators of the documents;
- Let us hereby decree our common goal of supporting blind interchange over mindless interoperability and chatty negotiated interchange.

In summary, since interoperationality (equality) is often bought at the expense of expressivity (liberty), interoperability is the wrong goal for scholarly humanities text encoding. Practitioners of this activity should aim for blind interchange. This still requires a lot of adherence to standards (e.g., TEI), but eschews mindless adherence that curtails expression of our ideas about our texts.

Acknowledgments

I would like to thank Paul Caton, C. Michael Sperberg-McQueen, and Kathryn Tomasek for their assistance.

References

[tri] Baer, Susanne. “Dignity, liberty, equality: A fundamental rights triangle of constitutionalism” in University of Toronto Law Journal, 59:4, Fall 2009, pp. 417-468. E-ISSN: 1710-1174; Print ISSN: 0042-0220. http://muse.jhu.edu/journals/tlj/summary/v059/59.4.baer.html.

[TEI P5] Burnard, Lou and Syd Bauman, eds. TEI P5: Guidelines for Electronic Text Encoding and Interchange. Version 1.9.1, 2011-05-05. TEI Consortium. http://www.tei-c.org/release/doc/tei-p5-doc/en/html/index-toc.html (2011-05-08.)

[CeHx] Cary, Elizabeth (Tanfield), Viscountess Falkland. The History of the Life, Reign, and Death of Edward II, 1680; p. 12. Women Writers Project first electronic edition, 2002. http://textbase.wwp.brown.edu/WWO/php/wAll.php?doc=cary-e.history.html#ipn12.

[MarkFut] Coombs, James, Allen Renear, Steve DeRose. “Markup Systems and the Future of Scholarly Text Processing” in Communications of the ACM, 30 (November 1987); 933–47. doi:https://doi.org/10.1145/32206.32209.

[oeinch] “interchange, n.” OED Online. Nov 2008. Oxford University Press. http://www.oed.com/view/Entry/97600?rskey=O5hSGe&result=1 (accessed 2008-11-14, available only to subscribers).

[mwinch] “interchange, v.” Merriam-Webster Online Dictionary. Nov 2008. Merriam-Webster, Incorporated. http://www.merriam-webster.com/dictionary/interchange (accessed 2008-11-14).

[oeinop] “interoperable, adj.” OED Online. Nov 2008. Oxford University Press. http://www.oed.com/viewdictionaryentry/Entry/98113 (accessed 2008-11-14, available only to subscribers).

[mwinop] “interoperability, n.” Merriam-Webster Online Dictionary. Nov 2008. Merriam-Webster, Incorporated. http://www.merriam-webster.com/dictionary/interoperability (accessed 2008-11-14).

[OL] Mill, John Stuart. On Liberty.

[GM] Pittman, R. Carter. “George Mason The Architect of American Liberty”. http://rcarterpittman.org/essays/Mason/George_Mason-Architect_of_American_Liberty.html, accessed 2011-07-10.

[Flaw] Renear, Allen. “The Descriptive/Procedural Distinction is Flawed”. Presented at Extreme Markup Languages 2000, Montréal, Canada, 2000-08-16T14:00. In Conference Proceedings: Extreme Markup Languages 2000, Graphic Communications Association; 215–218.

[SVG] Scalable Vector Graphics (SVG) 1.1 Specification, The World Wide Web Consortium. http://www.w3.org/TR/2003/REC-SVG11-20030114/.

[1 to ∞] Seaman, David. “TEI in your palm”. Presented at the TEI Members Meeting 2001, Pisa, Italy, 2001-11-16T11:30.

[LaE] Tibor R. Machan, ed. Liberty and Equality. ISBN: 978-0-8179-2862-9.

[Triple-S] Triple-S: The Survey Interchange Standard, version 2.0, Association for Survey Computing, 2006-12. http://www.triple-s.org/_vti_bin/shtml.dll/sssxmlfm.htm.

[WProc] Walsh, Norman. “Investigating the streamability of XProc pipelines.” Presented at Balisage: The Markup Conference 2009, Montréal, Canada, 2009-08-11/14. In Proceedings of Balisage: The Markup Conference 2009. Balisage Series on Markup Technologies, vol. 3 (2009). doi:https://doi.org/10.4242/BalisageVol3.Walsh01

[1] Note that a very strong fourth reason is to be able to achieve multiple instances of goals 2 & 3 from the same source: the “build once, use many” [1 to ∞] principle.

[2] Actually, I suppose avoiding proprietary binary formats is the double negation of using open standard text-based formats.

[3] Although it is worth mentioning that this interchange is easy only for those who have no need to use characters that are not (yet?) in Unicode.

[4] In a previous version of this paper I coined an additional term, interfunction, for one-way interoperability. I chose that term in order to evoke both the idea that something (in this case a text document) works, or functions, across some boundary; and the fact that in mathematics as well as computer science a function is not (in general) commutative:

Equation (a)

Equation (b)

[5] Yes, that may be too trivial for words. But in a 2009 talk [WProc] Norm Walsh presented the results of a study counting which steps were used in XProc pipelines. He didn’t care what the pipelines did, only what steps (i.e., XML element types) they used. For his purposes, any valid XProc document was interoperational.