Introduction

The goal of this report is to offer a contextualized introduction of the alignment ribbon, a new visualization of textual collation information, implemented in SVG. The three main sections, sandwiched between this introduction and a conclusion, are:

-

About textual collation. Textual scholars often align moments of agreement and moments of variation across manuscript witnesses in order to explore and understand how those relationships contribute to a theory of the text, that is, to understanding the history of the transmission of a work. The first major section of the present report provides an overview of collation theory and practice, which is the research context that motivated the development of our new visualization and its implementation in SVG.

-

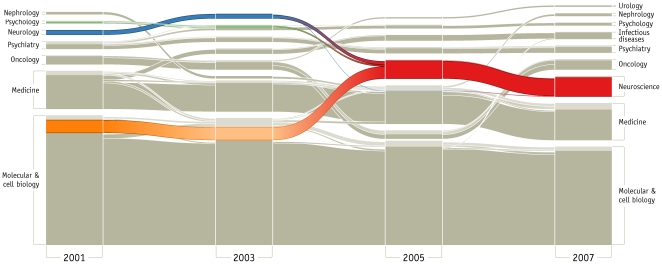

Modeling and visualizing alignment. Textual collation is a research method that supports the study of textual transmission. Collation is simultaneously an analytical task (identifying the alignments that facilitate an insightful comparison of manuscript witnesses), a modeling task (interpreting those alignments in a way that makes a theory of the transmission history accessible for further study), and a visualization task (translating the abstract model into a representation that communicates the meaning to human readers). Textual collation is always about text, but visualizations can communicate features of the model through a combination of textual and graphic features. The second major section of this report surveys influential visualization methods used in textual collation with the goal of identifying their relative strengths and weaknesses. In particular, we provide a thorough history of the development of the variant graph, which has played a singularly prominent role in collation theory and practice. The identification of the strengths and weaknesses of different visualizations matters because it motivates and informs the development of our original alignment ribbon visualization.

-

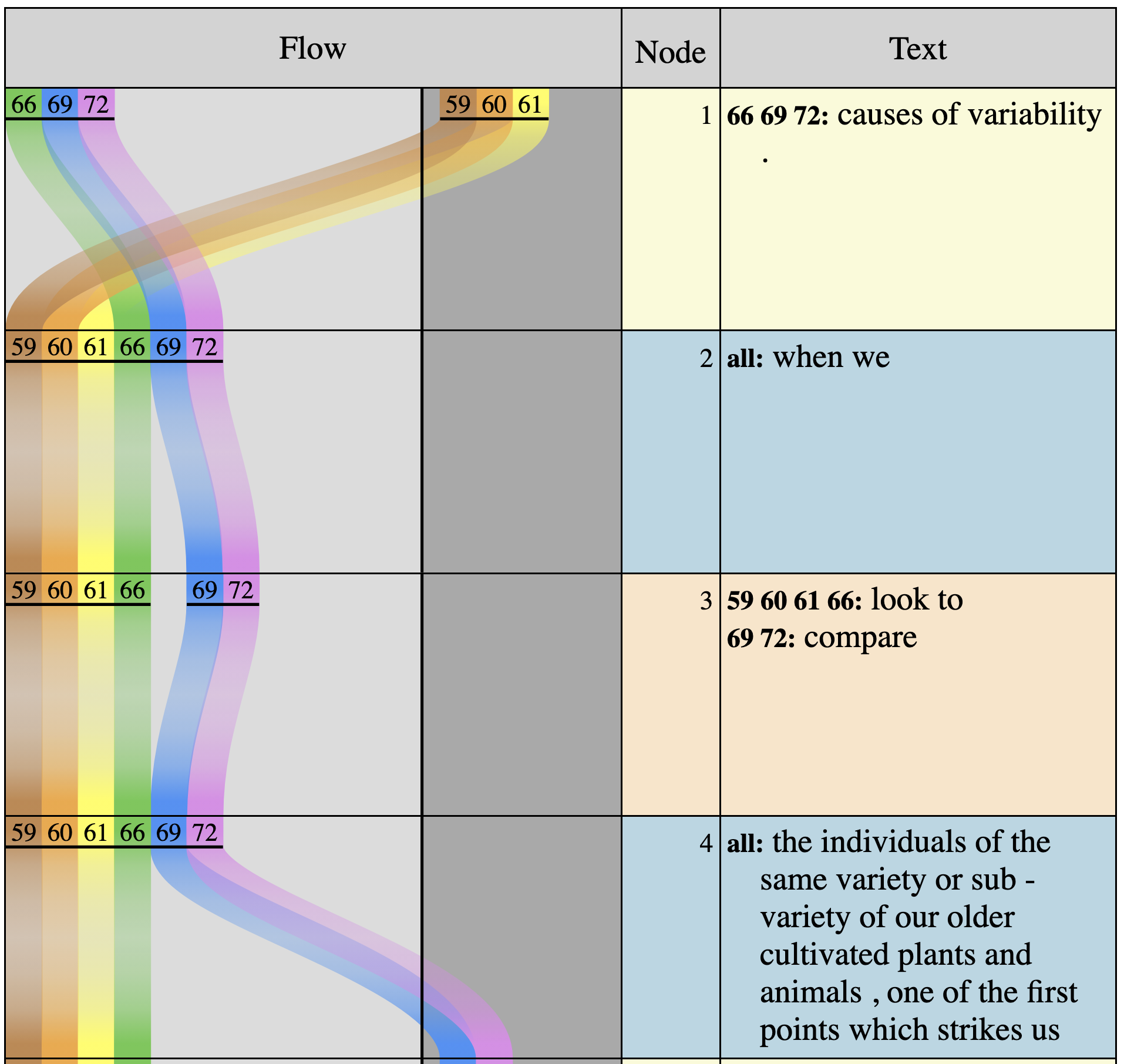

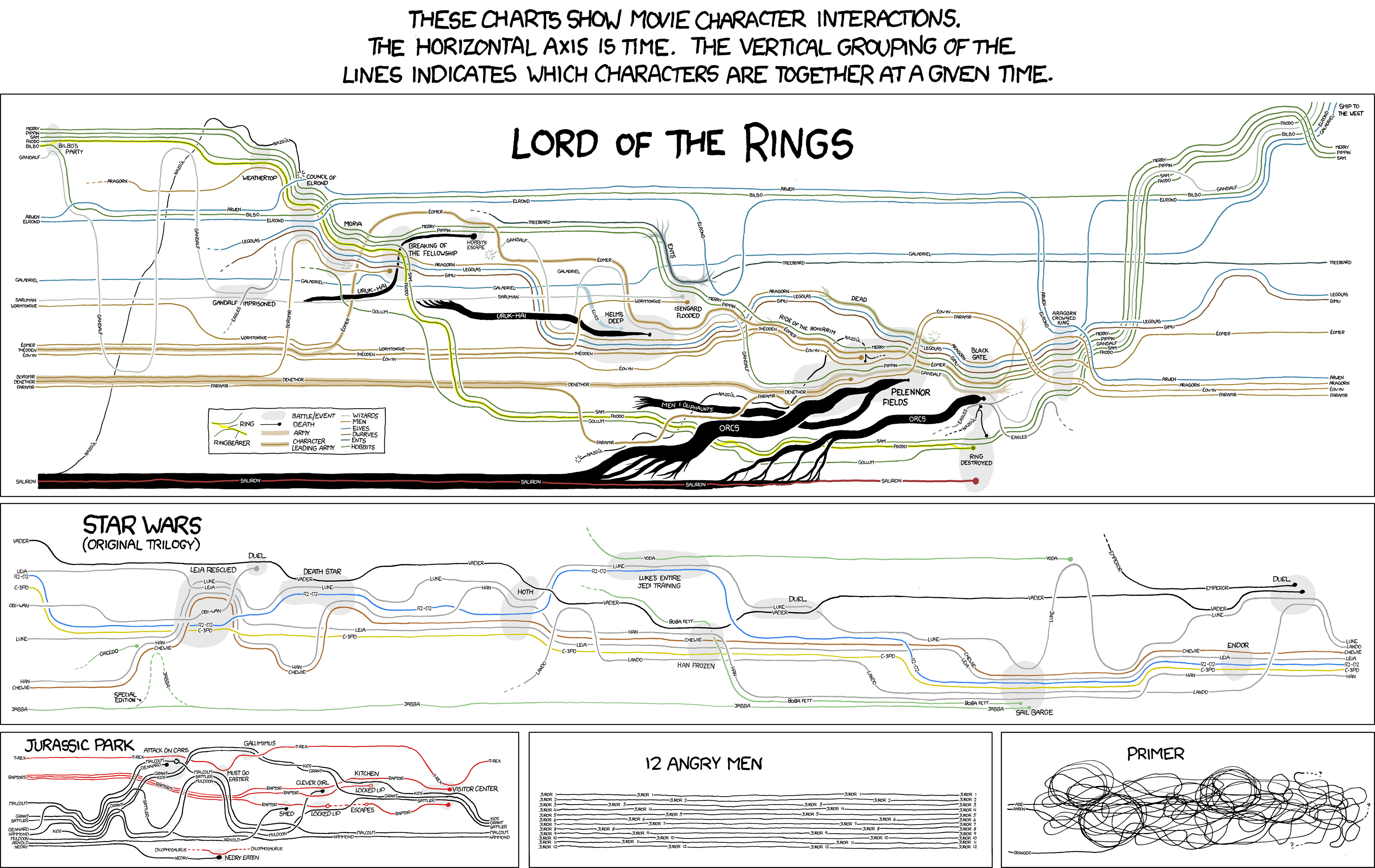

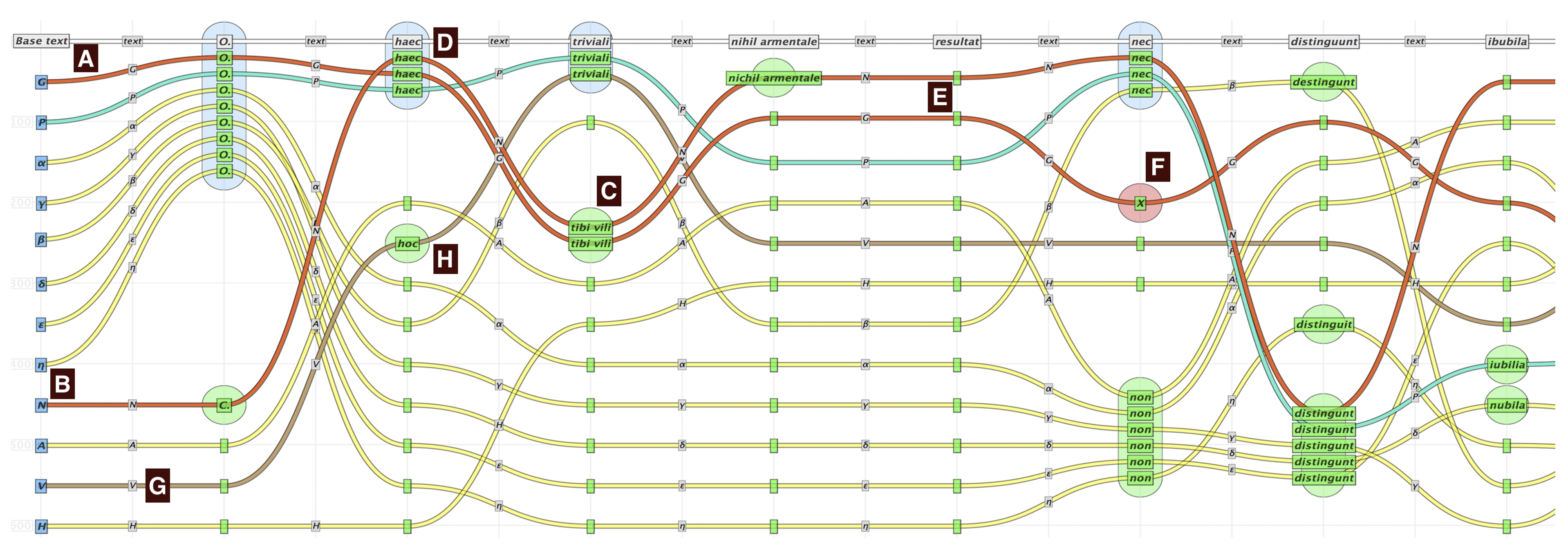

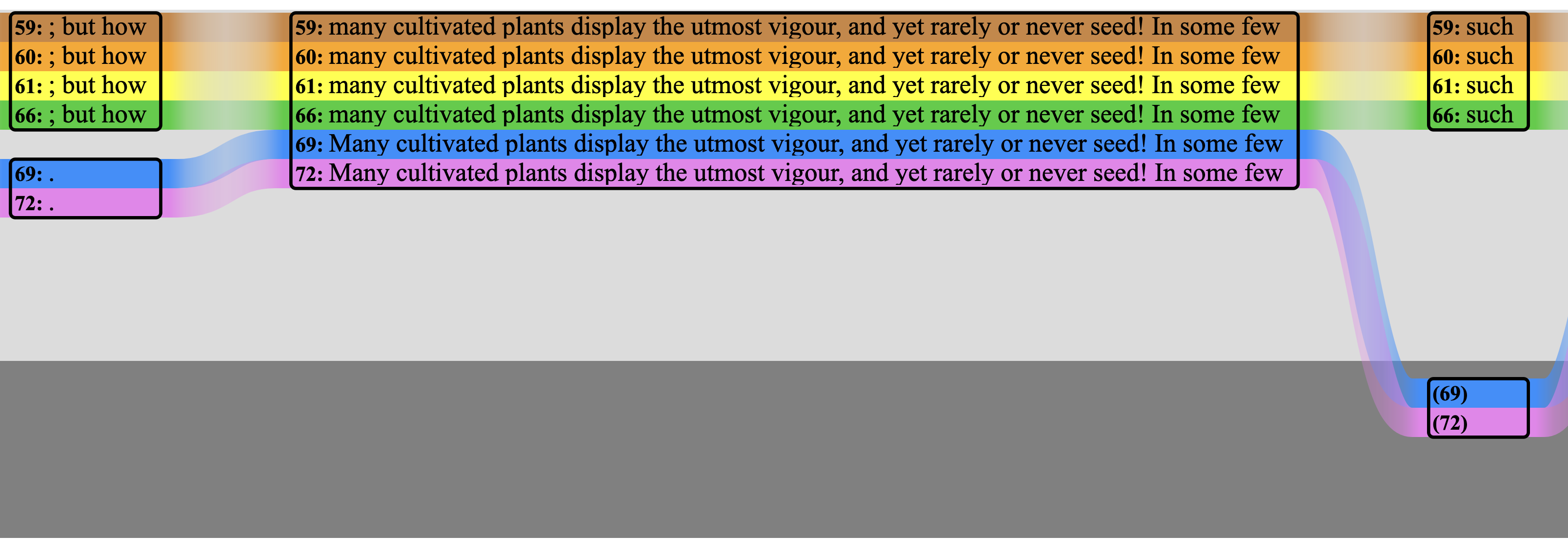

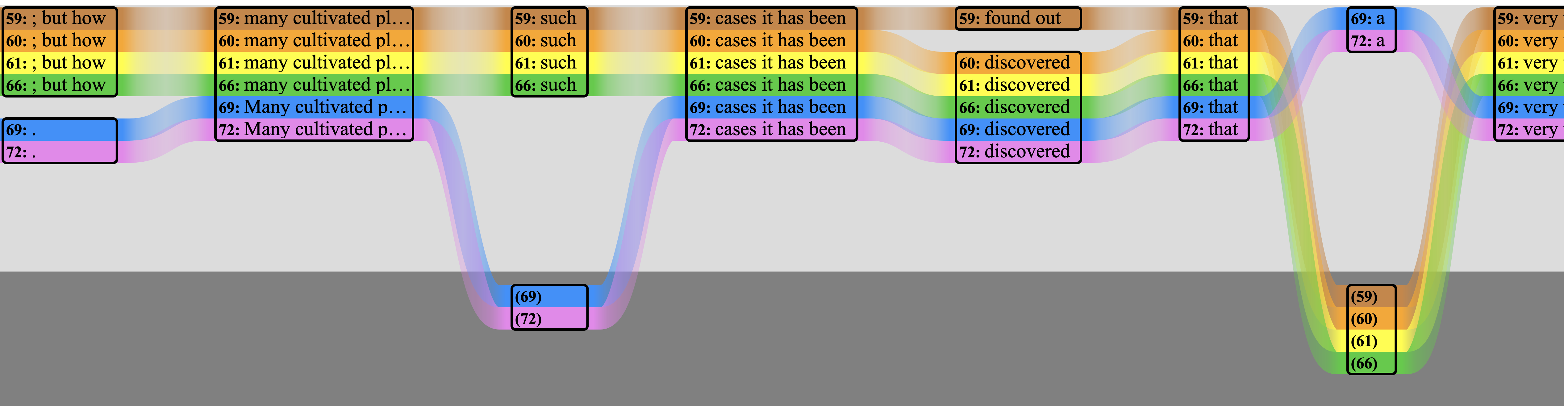

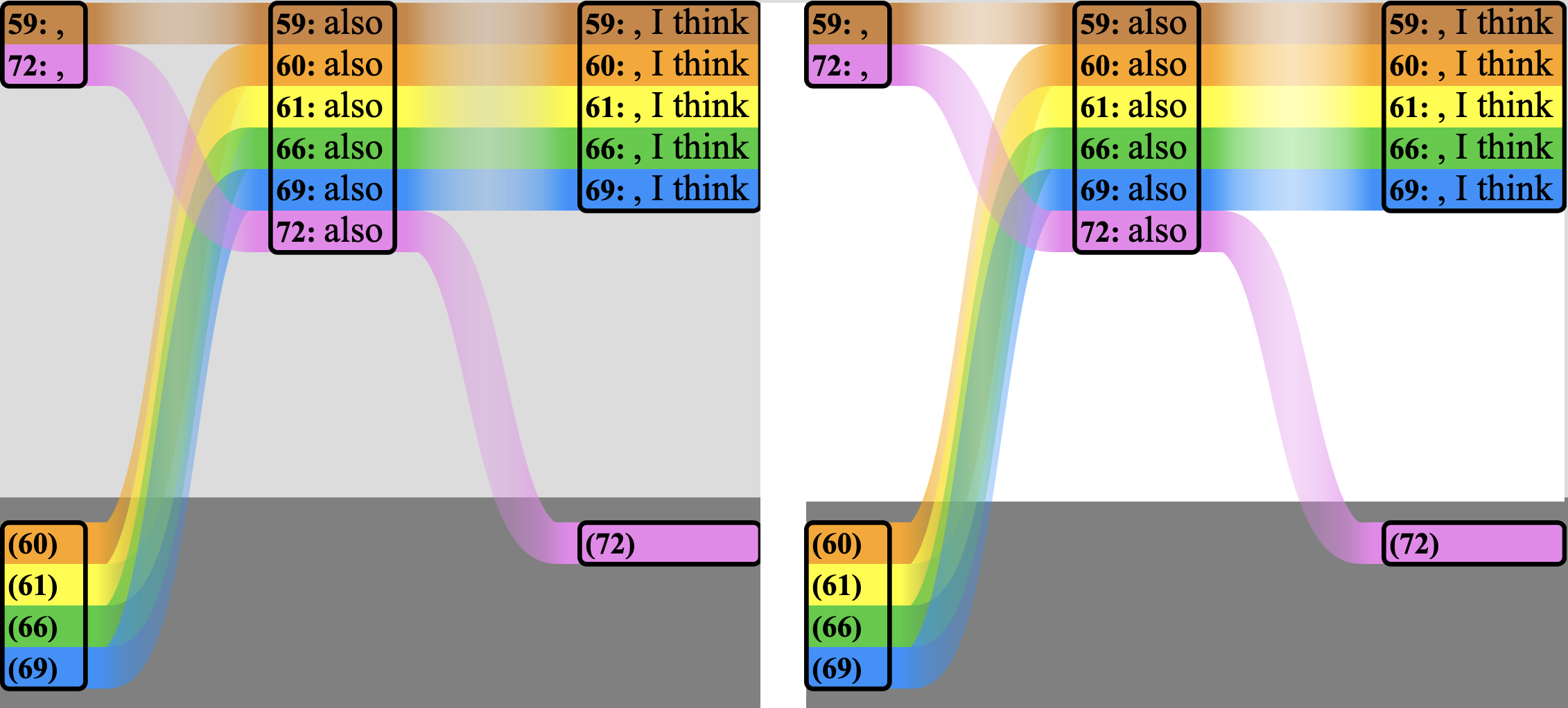

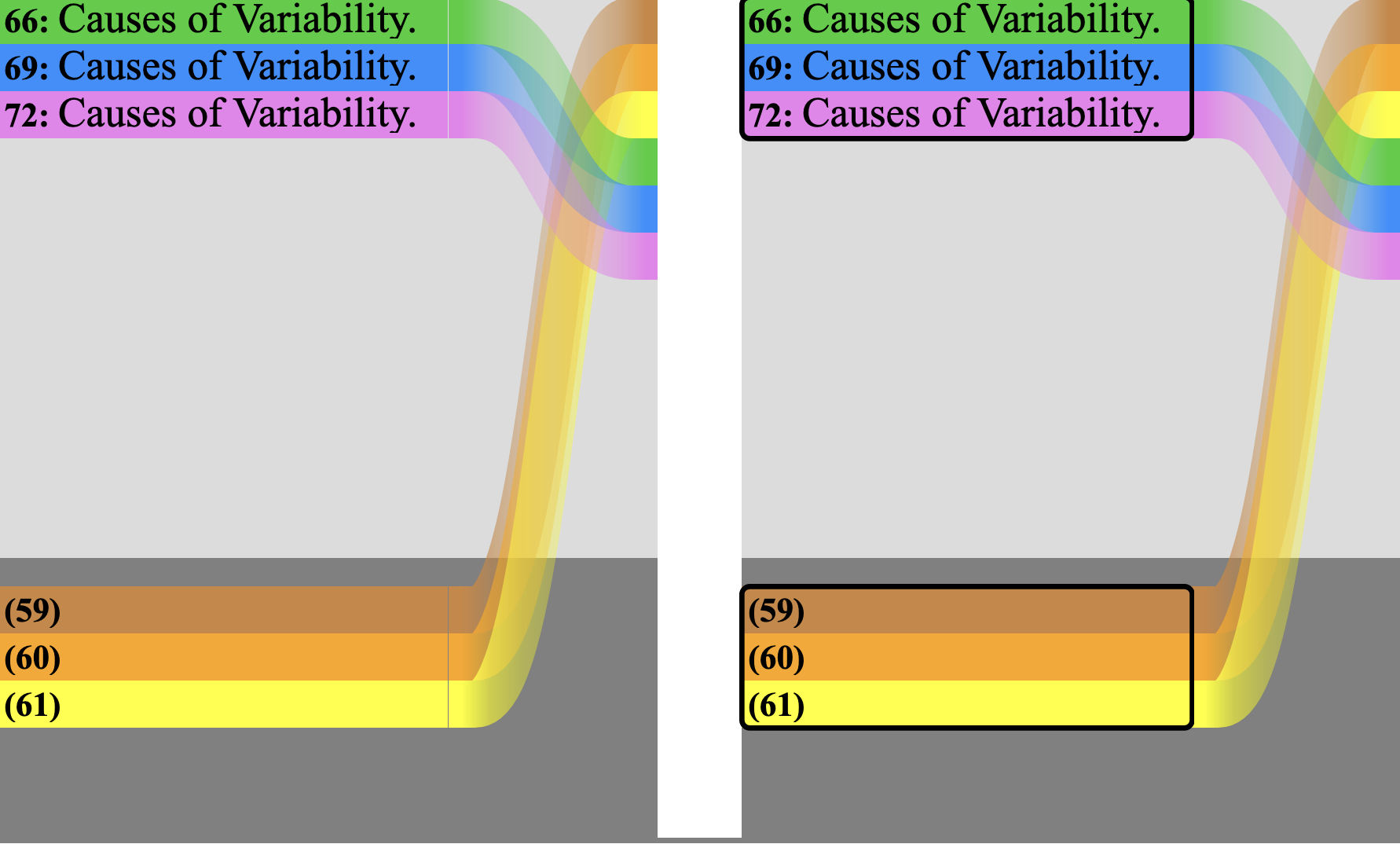

Alignment ribbon. The alignment ribbon is an original collation visualization that seeks to combine the textual clarity of an alignment table with the graphical insights of variant-graph and storyline visualizations. The alignment ribbon is implemented as the SVG output of a Scala program that ingests plain-text input, performs the alignment, and creates the visualization. The output relies on SVG and SVG-adjacent features that are not always, at least in our experience, widely used, and that enable us to produce the visualization we want without compromise and without dependence on potentially fragile third-party visualization libraries or frameworks. The third major section of this report describes the alignment ribbon and its implementation, with particular attention to those SVG and SVG-adjacent features.

About textual collation

This section introduces the research motivation and context for visualizing textual collation. Subtopics explain why collation matters to textual scholars, how computational philologists have engaged with machine-assisted collation in the past, and how our current work attempts to improve on previous implementations, including our own. Because it would not be appropriate or realistic to incorporate a comprehensive tutorial on textual collation in this report, this introductory section provides only a high-level overview of the topic.

Textual collation relies on visualization in at least two different but overlapping contexts. First (in workflow order), visualization can help ensure that the output of a collation process will be intelligible to the developers who must evaluate and measure the results of their implementation as they seek to improve it. Second, visualization is one way that end-user researchers can understand and communicate the results of the textual analysis that serves as the focus of their philological explorations. Visualizations tell a story, and they are valuable for their ability to summarize complex information concisely, a result that they achieve by, among other things, focusing attention on some features by excluding or otherwise deemphasizing others. This selectivity means that every visualization entails choices and compromises with respect to both what to include and how to represent it. It should not be surprising that different visualizations may be useful for different purposes, involving not only the different (even if overlapping) needs of developers and end-user philologists, but also diverse requirements within each of those communities.

The terms collation and alignment are often used interchangeably to refer to the organized juxtaposition and comparison of related textual resources. In this report we distinguish them, adopting the terminology of the Gothenburg Model (see below), where alignment refers narrowly to the identification of philologically significant moments of agreement and variation in textual resources and collation refers to a larger workflow that includes pre-processing to assist in the alignment, the alignment itself, and post-processing to present the alignment results to the researcher in an accessible form.[1]

Why textual scholars care about collation

Philologists refer to manuscript copies of the same work as textual

witnesses, and it is rare for two witnesses to the same work to agree

in all details. If textual scholars were to discover four witnesses to the same work

that

agreed in most places, but where the moments of disagreement always fell into the

same

pattern (e.g., witnesses A and B typically share one reading at locations where witnesses

C

and D share a different reading), they would try to explain how those variants arose

as new

textual witnesses were created by copying (imperfectly) earlier ones. Absent specific

reasons to believe otherwise, a common and sensible working hypothesis is that one

of two or

more variant readings continues an earlier version of the text and other readings

have

diverged from it because they have incorporated deviations introduced, whether accidentally

or deliberately, during copying. Crucially, philologists assume, unless there is good

reason

to believe otherwise, that coincidences are unlikely to arise by chance, that is,

that the

scribes of A and B (or of C and D) did not independently introduce identical changes

in

identical places during copying. Willis 1972 (cited here from Ostrowski 2003, p. xxvii) explains the rationale for this assumption by means

of an analogy: If two people are found shot dead in the same house at the same time,

it is indeed possible that they have been shot by different persons for different

reasons,

but it would be foolish to make that our initial assumption

(p. 14).

Variation during copying may arise through scribal error, such as by looking at a source manuscript, committing a few words to short-term memory, and then reproducing those words imprecisely when writing them into a new copy. Scribes may also intervene deliberately to emend what they perceive (whether correctly or not) as mistakes in their sources. A scribe copying a familiar text (such as a monk copying a Biblical citation in a larger work) might reproduce a different version of the text from memory. A scribe who sees a correction scribbled into the margin by an earlier reader may regard it as correcting an error, and may therefore decide to insert it into the main text during copying. These are only a few of the ways in which variation may arise during copying, but whatever the cause, the result is an inexact copy. The witnesses that attest an earlier reading at one location will not necessarily attest earlier readings at other locations; each moment of variation requires its own assessment and decision. The is no justification in automatically favoring the majority reading because a secondary reading may be copied more than a primary one; this means that competing readings must be evaluated, and not merely counted. For reasons explained below, there is also no justification in automatically favoring the oldest manuscript; both older and younger manuscripts may continue the earliest readings.

Scholars who encounter textual variation often care about identifying the readings that might have stood in the first version of a work, which, itself, may or may not have survived as a manuscript that is available to the researcher. Textual scholars may also care about the subsequent history of a work, that is, about which changes may have arisen at different times or in different places across the copying tradition. The process of comparing manuscript variants to construct an explanatory hypothesis about the transmission of the text is called textual criticism, and a necessary starting point for that comparison involves finding the moments of agreement and disagreement among manuscript witnesses. Identifying the locations to be compared closely across the manuscript witnesses is the primary task of collation.

Three challenges of collation

Collating a short sentence from a small number of witnesses is simple enough that we can perform the task mentally without even thinking about how the comparison proceeds. Consider the sentence below, taken from Charles Darwin’s On the origin of species as attested in the six British editions published during the author’s lifetime:

Table I

From Charles Darwin, On the origin of species

| 1859 | The | result | of | the | various, | quite | unknown, | or | dimly | seen | laws | of | variation | is | infinitely | complex | and | diversified. | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1860 | The | result | of | the | various, | quite | unknown, | or | dimly | seen | laws | of | variation | is | infinitely | complex | and | diversified. | |

| 1861 | The | result | of | the | various, | quite | unknown, | or | dimly | seen | laws | of | variation | is | infinitely | complex | and | diversified. | |

| 1866 | The | result | of | the | various, | quite | unknown, | or | dimly | seen | laws | of | variation | is | infinitely | complex | and | diversified. | |

| 1869 | The | results | of | the | various, | unknown, | or | but | dimly | understood | laws | of | variation | are | infinitely | complex | and | diversified. | |

| 1872 | The | results | of | the | various, | unknown, | or | but | dimly | understood | laws | of | variation | are | infinitely | complex | and | diversified. |

The representation above is called an alignment table, and we’ll

have more to say about alignment tables as visualizations below. For now, though,

what

matters is that an alignment table models shared and different readings across witnesses

as

a sequence of what we call alignment points, represented by columns in

the table.[2] Alignment points can be described as involving a combination of

matches (witnesses share a reading), non-matches

(witnesses contain readings, but not the same readings), and indels

(insertions / deletions

, where some witnesses contain readings and some

contain nothing). Because there may be more than two witnesses in a textual tradition,

these

three types of pairwise relationships correspond to four full-depth

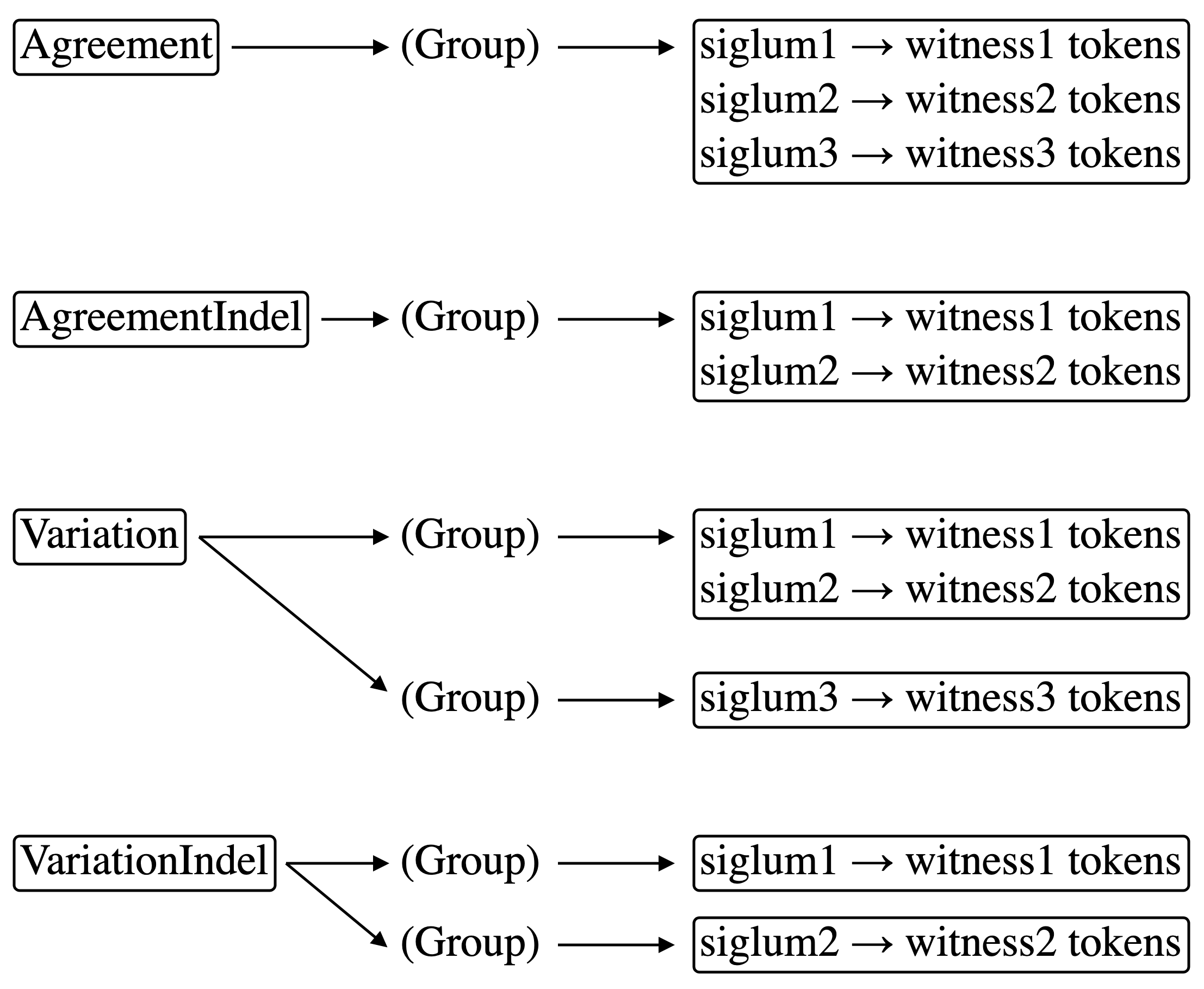

(all-witness) relationship types, which we call:

-

Agreement: All witnesses are present and have the same value

-

AgreementIndel: Not all witnesses are present, but those that are present all have the same value

-

Variation: All witnesses are present, but they do not all have the same value

-

VariationIndel: Not all witnesses are present, and those that are present do not all have the same value

It is easy to see in the alignment table example above that 1) the versions of this sentence in the first four editions are identical to one another; 2) the same is true of the last two; and 3) the two subgroups agree with each other more often than not (all witnesses agree in fourteen of the nineteen textual columns). We happen to know the dates of these editions (something that is rarely the case with medieval manuscript evidence), but even without that chronological metadata we could hypothesize that the two subgroups go back to a common source (which explains the predominant agreement across the entire set of witnesses) and that at each moment of divergence either one was created by editing the other or both were created by editing a common ancestor that is not available for direct study. As those options demonstrate, identifying the patterns of agreement and disagreement among witnesses is only part of the work of the philologist, who will also want to decide the direction of any textual transmission. In this case we have metadata evidence that the original reading is the one from the first edition (1859), which is reproduced without change in the next three (1860, 1861, 1866), and that Darwin then revised the text for the 1869 edition and reproduced those revisions in 1872. However, as mentioned above, comprehensive dating information is rarely available with medieval manuscripts and we cannot be confident that the same witness or group of witnesses will always (that is, at all locations in the text) continue the earliest reading.

Most real-world collations do not tell as clear a story as the one above, and there are three types of common textual phenomena that pose particular challenges for aligning textual witnesses in order to understand the relationships among them:[3]

-

Repetition: The distribution of words in a text in many languages converges, as the length of the text grows, on a distribution called Zipf’s Law:

[T]he most common word occurs approximately twice as often as the next [most] common one, three times as often as the third most common, and so on

. (Zipf’s Law (Wikipedia); text in square brackets has been added) This means that the repetition of words is to be expected, and part of the task of collating textual witnesses involves choosing which instance of a repetition to align with which other instances. In the alignment table above (Table I), the wordof

occurs twice in each witness and it is easy (at least for a human) to see which instances to align with which others. That decision is more challenging when the number of repetitions increases and the amount of context around them does not tell as clear a story as the example above, whereof

occurs within the three-word phrasesresult of the

andlaws of variation

. -

Transposition: Scribes may change the order of words (or larger units) while copying. For example, in another location Darwin writes

will be hereafter briefly mentioned

in the first four editions andwill hereafter be briefly mentioned

in the last two. This is an adjacent transposition; transposition may also occur at a distance, such as when an author moves a sentence or paragraph or larger section from one location in a work to another across intermediate text (which may or may not be shared by eome or all of the witnesses). Distinguishing actual editorial transposition from the accidental appearance of the same content in different locations in different witnesses is further complicated by repetition. We might expect editorial transposition (as contrasted to the accidental appearance of the same word in different contexts in different witnesses) to be more likely over short distances than long ones, but translating that vague truism into rules that a computer can apply usefully can be challenging. -

Number of witnesses: It is relatively easy to compare two witnesses because the moments of comparison have only two possible outcomes: assuming we have successfully negotiated repetition and transposition, the readings at each location are either the same or not the same.[4] Comparing three readings has five possible outcome groupings: three versions of two vs one, one where all agree, and one where all disagree. Comparing four things has fifteen possible outcomes: four versions of three vs one, three of two vs two, six of two vs one vs one (three groups), one with complete agreement and one with no agreement. Even without expanding further it is easy to see that as the number of witnesses increases linearly the number of possible groupings increases at a far greater rate.[5] Computers often deal more effectively than humans with large amounts of data, but the machine-assisted alignment of large numbers of long witnesses typically requires heuristic methods because even with computational tools and methods it is not realistic to evaluate all possible arrangements and combinations of the witness data.

The Gothenburg Model of textual collation

The Gothenburg Model of textual collation emerged from a 2009 symposium within the

frameworks of the EU-funded research projects COST Action 32 (Open scholarly

communities on the web

) and Interedition, the output of which was the

modularization of the study of textual variation into five stages:[6]

-

Tokenization: The witnesses are divided into units to be compared and aligned. Most commonly the alignment units are words (according to varying definitions of what constitutes a word for alignment purposes), but nothing in the Gothenburg Model prohibits tokenization into smaller or larger units.

-

Normalization: In a computational environment the tokens to be aligned are typically strings of Unicode characters, but a researcher might regard only some string differences as significant for alignment purposes. At the Normalization stage the collation process creates a shadow representation of each token that neutralizes features that should be ignored during alignment, so that alignment can then be performed by comparing the normalized shadows, instead of the original character strings. For example, if a researcher decides that upper vs lower case is not significant for alignment, the normalized shadow tokens might be created by lower-casing the tokens identified during the Tokenization stage.[7]

-

Alignment: Alignment is the process of determining which normalized shadow tokens in the different witnesses should be compared to one another. It is the alignment process that identifies the moments of agreement and moments of variation recorded in the columns of the alignment table example above.

-

Analysis: Analysis refers to adjustments introduced into the alignment after completion of the Alignment stage. These adjustments may involve human intervention to resolve decisions that cannot be automated fully. More interestingly, though, the Analysis stage refers also to automated adjustments. For example, the Alignment stage of the current Python version of CollateX recognizes agreement only when the normalized shadow tokens agree exactly, but that version of CollateX also allows for near matching (that is, fuzzy matching). Near matching is implemented as part of the Analysis stage, which means that it is applied only to resolve alignment uncertainties that cannot be decided during the preceding Alignment stage.

-

Visualization: Visualization might more accurately be called output or serialization, since it refers to outputting the result of the collation process for subsequent viewing (literal visualization) or further downstream processing. CollateX supports several output formats, including the alignment table illustrated above and others discussed below.

One motivation for the development of the Gothenburg Model is that the work performed by the five components is likely to be important for any computational engagement with textual variation, but the way the different stages are implemented and applied may vary according to language, text, and research question, as well as according to programming language and paradigm. The modular structure that constitutes the principal original contribution of the Gothenburg Model to collation theory and practice makes it possible to customize one part of the process without having to rewrite the others. In other words, the Gothenburg Model regards collation as a processing pipeline, where the five stages happen in order, the output of each serves as the input to the next, and no stage has to know about the inner workings of any other.[8]

Why order-independent multiple-witness alignment matters

The alignment of manuscript witnesses is an example of a more general process known as sequence alignment, which is well developed in bioinformatics, where it is fundamental to comparing gene sequences. Although there are domain-specific differences between textual and genetic sequencing (see Birnbaum 2020, §1.2, p. 46 and Schmidt and Colomb 2009 §2.2, p. 500), the difference between paired vs multiple-witness alignment is fundamental to both. Specifically, an algorithm that can be proven to create an optimal alignment of two sequences has been in use for more than fifty years (Birnbaum 2020 explores it within an XSLT context), but the alignment of more than two sequences is considered to be an NP-hard problem, which means—to simplify—that no scalable (that is, implementable) algorithm is known that can ensure the optimal alignment of more than two witnesses.

The absence of an algorithm that ensures an optimal alignment of multiple witnesses has required work-arounds, the most common of which is progressive alignment. Progressive alignment begins by aligning two witnesses (for which an algorithm exists), regards that alignment as a sort of super-witness, and then aligns it against a third witness, etc. One type of progressive alignment begins by merging two singleton witnesses and then incorporates the remaining witnesses into the mixture one by one. If the result of the initial merger of two singleton witnesses is modeled as a graph, subsequent mergers each combine one graph with one singleton. We refer to this as simple progressive alignment. A more powerful approach to progressive alignment, which we call complex progressive alignment, also permits mergers that do not involve any singletons, so that, for example, a first step might merge singleton witnesses A and B to create graph AB; a second step might merge singleton witnesses C and D to create graph CD; and a third step might merge graphs AB and CD to create graph ABCD. The implementation of complex progressive alignment is more challenging than implementing simple progressive alignment, and current release versions of CollateX (Java and Python) employ the simple type of progressive alignment, arriving at an alignment of all witnesses by incorporating one singleton at a time.

The reason progressive alignment is not wholly satisfactory is that, as a greedy workflow, it is subject to order effects; once a super-witness has been formed it cannot be broken apart, which means that progressive alignment may produce different results according to the order in which the witnesses are incorporated.[9] This property is undesirable because the optimal alignment of multiple witnesses from a philological perspective cannot depend on the order in which arbitrary researchers at an arbitrary moment happen to touch the witnesses. An alignment process might improve the outcome of progressive alignment by incorporating the witnesses in an order that reflects the copying history, based on a heuristic scan and organization of the evidence into a guide tree, but the benefit of that strategy depends on the informational quality of the scan, converging on a reductio ad absurdum workflow that must perform and assess the quality of the entire computationally expensive alignment with all possible permutations of the witnesses in order to determine the optimal order. More insidiously, because manuscript witnesses may incorporate and merge information from multiple sources, the optimal order for incorporating witnesses into a progressive alignment in one place may not be the optimal order in a different place.

What the collation of more than two witnesses requires, then, is order-independent multiple-witness alignment, that is, a method that considers all evidence from all witnesses simultaneously when making alignment decisions. As noted above, the current Java and Python versions of CollateX perform progressive alignment, and the authors of this report are in the process of implementing a new alignment algorthm, to be incorporated into a future release of CollateX, that will perform order-independent multiple-witness alignment. Because order-independent multiple-witness alignment is NP-hard, our new method will necessarily rely on heuristics, including limited progressive-alignment methods, but it is intended to reduce the susceptability of the alignment to order effects, even if it it not able to eliminate those effects entirely.

Our development of a new alignment algorithm provided a context for us to review both the model underlying the way alignment is performed and recorded in CollateX and the way the results of the alignment process are exported and presented for visualization.

Modeling and visualizing alignment

The examination below of existing visualizations of textual alignment rests on the following assumptions:[10]

-

Visualizations are selective views of data, which means that different visualizations deliberately include and exclude different features and they foreground different aspects of the features that they do include. Our discussion below does not attempt to identify

the best visualization

in any absolute way because different visualizations may tell different useful stories about the same original data, and a visualization that is more effective when telling one story may be less so when telling another.[11] -

The most useful data structures for modeling data to support computational processing may not be the most useful for visualization, that is, for telling a story to humans with visual methods. For example, a model for processing may prioritize eliminating redundancy, while a visualization may deliberately include redundant information for rhetorical purposes. The focus of our discussion below prioritizes the communicative consequences of different visual expressions of the output of a collation process.

-

The same data structure may be expressed (serialized, represented) in different ways. For example a graph can be understood mathematically as a set of nodes (also called vertices) and a set of edges (also called arcs), which means that a graph can represented fully and correctly by listing the members of those sets. Such lists are not easy for humans to understand, and when we speak of graphs, we typically have a more … well … graphic image in mind. The graphic renderings of variant graphs below (Variant graph) use SVG to present the nodes and edges of a graph in ways that make the relationships among them visually perspicuous. The discussion below explores the communicative effects of different visual representations of variation.

Textual visualizations

Critical apparatus

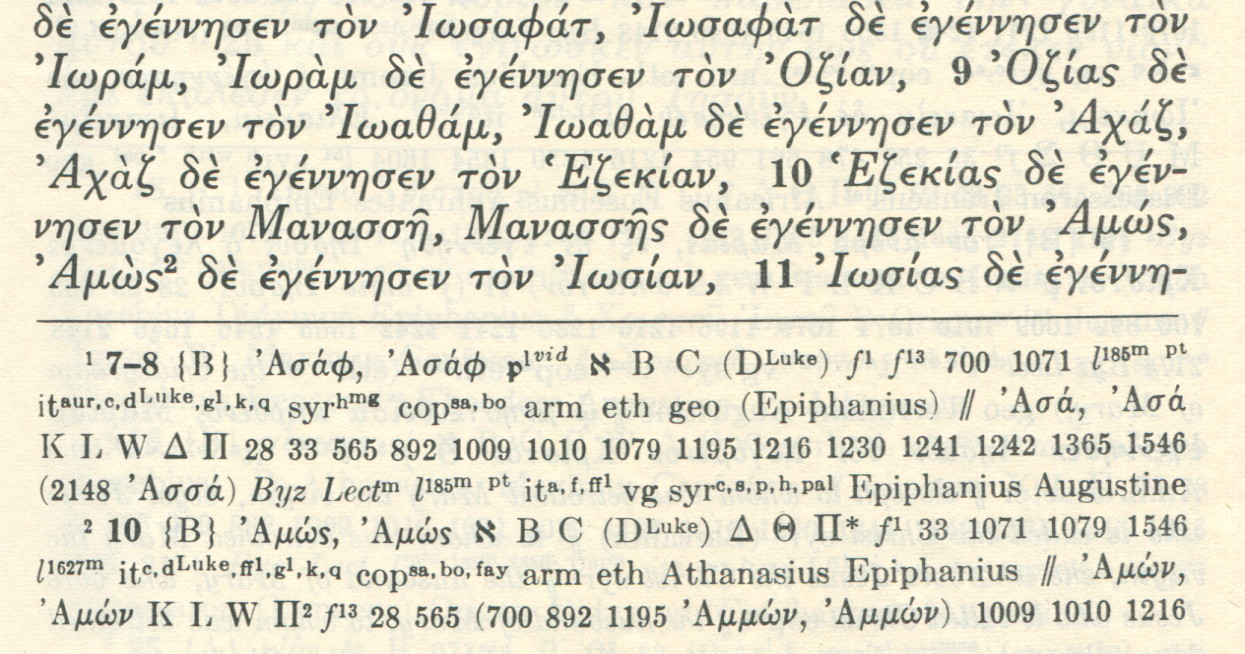

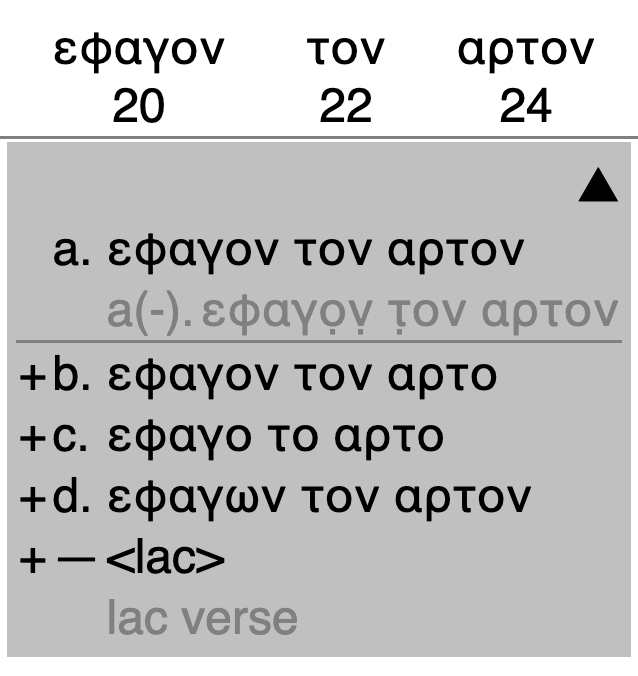

The most familiar visualization of variation for philologists is likely to be the critical apparatus, or apparatus criticus, a textual presentation that records variation across witnesses in notes (usually footnotes).[12] The image below of a critical apparatus is from the United Bible Societies edition of the Greek New Testament:[13]

Figure 1: Greek New Testament (text and apparatus)

Reproduced from Greek New Testament, p. 1.

The continuous reading in larger print, at the top, represents the editors’ judgment about the establishment of the text. This means that in situations where the textual evidence is inconsistent, the editors represent, in the continuous reading, what they believe is most likely to have appeared in a source ancestral to all surviving copies. The editors of this Greek New Testament do not regard any single extant manuscript as always attesting the best reading; that is, there is no best witness. As a result, the main reading version does not correspond to any single witness in its entirety, representing, instead, what is sometimes referred to as a dynamic critical text.[14] Whether a particular work has a single extant best witness vs whether a dynamic critical text is a better representation of the tradition is an editorial decision.

Some editions that draw on multiple sources, especially where the chronology of the

editorial changes is known, may focus not on establishing an earliest text, but on

facilitating the comparative exploration of the evidence. The information from Darwin’s

On the origin of species displayed as an alignment table in Table I, above, is published digitally with a critical apparatus in

Barbara Bordalejo’s Online variorum of Darwin’s Origin of

species

at

http://darwin-online.org.uk/Variorum/1859/1859-7-dns.html. Both the table

above and the online Variorum organize and present the readings from the six British

editions of the work published during Darwin’s lifetime. This type of evidence does

not

ask the editor to establish a dynamic critical text; the research question raised

by this

type of tradition and facilitated by a edition of multiple witnesses is not What is

likely to have stood in the original?

(we know that the 1859 edition is Darwin’s

first publication of his text), but What did Darwin change in subsequent editions,

and when, and how should we understand those changes?

[15]

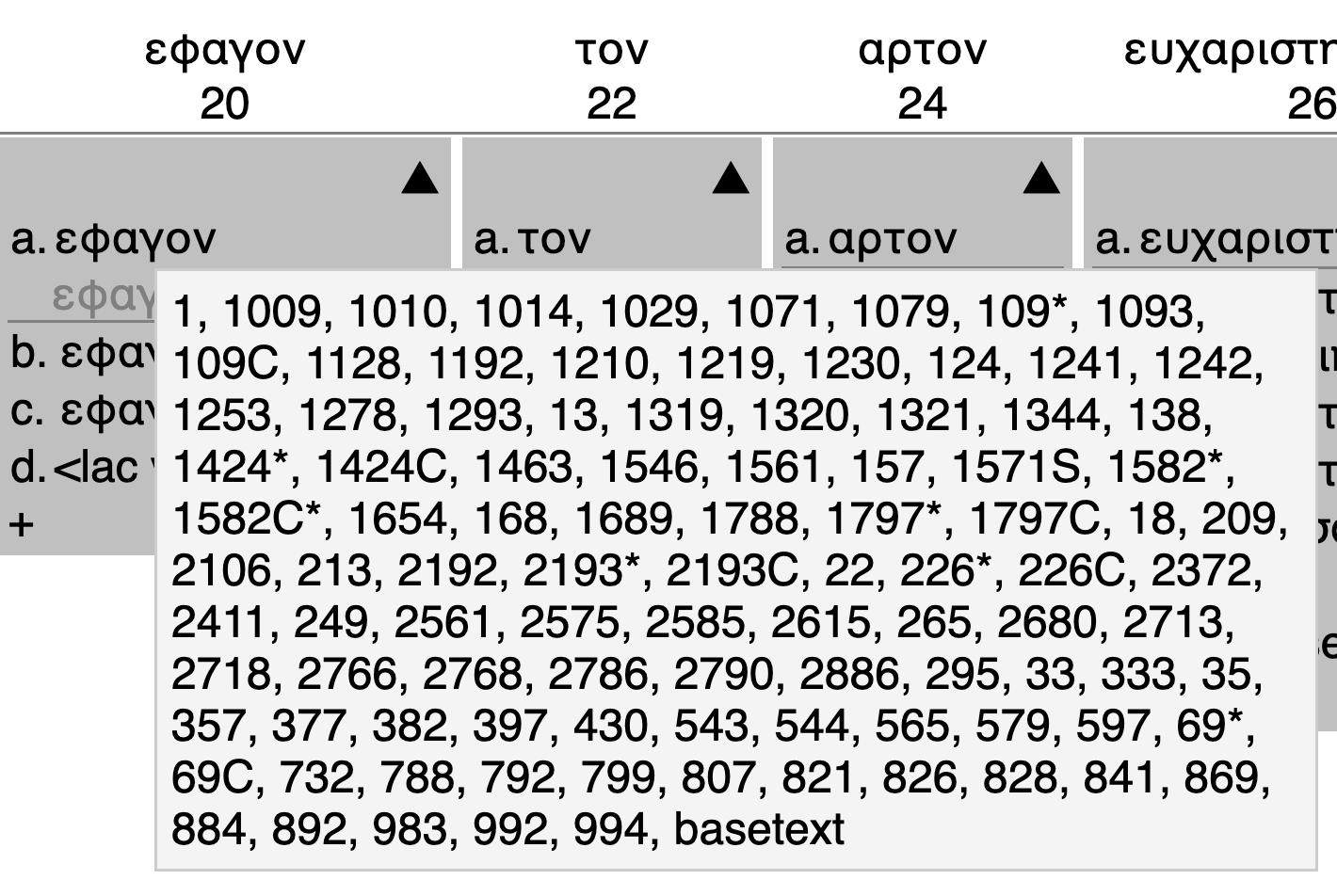

The critical apparatus in the Nestle-Aland New Testament is selective, that is, it reports only variants that the editors consider significant for understanding (and, in the case of the United Bible Societies publication, translating) the text. Reasonable persons might disagree, at least in places, about what constitutes significant vs insignificant variation, about which see below.[16] Furthermore, the apparatus mentions explicitly only readings that disagree with the editors’ preferred reading; this is called a negative apparatus, and it implies that any witness not listed in the apparatus agrees with the reading in the critical text. A negative apparatus that conforms to this assumption is informationally equivalent to a positive apparatus, that is, one that explicitly lists the witnesses that agree with the preferred reading. These and other parsimonious editorial preferences may be unavoidable in the case of the Greek New Testament, which is preserved in and attested by an unmanageably large number of witnesses.[17]

Understanding a critical apparatus is challenging for new users because the notational

conventions prioritize concision, but editions come with introductions that explain

the

meaning of the manuscript identifiers (called sigla) and other

special editorial conventions. In the example above, the second apparatus entry

(introduced by a small, superscript numeral 2

) says that, with respect to

verse 10, there is some degree of doubt

(the B in curly braces is on a scale from virtual certainty

for

A to a high degree of doubt

for

D) about the decision to select ᾽Αμώς, ᾽Αμὼς as the preferred reading (in the last two lines of the main

text); that ᾽Αμώς, ᾽Αμώς is attested in witnesses

א, B, C, etc.; that witnesses K,

L, W, etc. attest

instead ᾽Αμών, ᾽Αμών; and that witnesses 700, 892, and 1195 attest ᾽Αμμών, ᾽Αμμών,

which the editors regard as a variant spelling of the version with a single μ.

The critical apparatus has long been the predominant method of reporting textual variation in paper publication; it is valuable for its extreme concision, which is especially important, for economic reasons, in the context of publication on paper. Additionally, because a critical apparatus groups sigla that share a reading, it foregrounds which witnesses agree with one another at a particular moment in the text. The concision is undeniably cognitively alienating for new users, but philologists quickly become reasonably comfortable with it, at least when working with texts with which they are familiar. At the same time, the critical apparatus as a representation of textual transmission comes with at least two severe informational limitations:

-

Editors typically record only what they consider textually significant variants in an apparatus. Removing distractions that do not contribute to understanding the history of the text has obvious communicative merit, but what happens when reasonable persons disagree about what constitutes significant vs insignificant variation? The complete omission from the apparatus of variation that the editor considers insignificant makes it impossible for users to assess and evaluate the editor’s decisions and agree or disagree with them in an informed way. It is, to be sure, the editor’s responsibility to make critical decisions that interpret the evidence for users, but the complete exclusion of some variants as insignificant is a different editorial action than deciding which version goes into the main reading text and which versions are relegated to the apparatus as variants. Ultimately, excluding variants that some readers might reasonably consider textually significant even when the editors do not compromises the documentary value of the edition. Furthermore, in the (common) case of a negative apparatus, the omission of a witness from the apparatus becomes ambiguous: either an omitted witness agrees with the preferred reading in all details or it disagrees with it, but in a way that the editor regards as not significant. Insofar as editions sometimes rely on manuscript evidence that is not otherwise easily accessible to users, an editor’s decisions about the omission of variants are not verifiable.

-

For many the principal goal of an edition is to establish an authoritative text, that is, one that reconstructs (or, perhaps more precisely, constructs a hypothesis about) the earlier contents of the text by eliminating changes that were introduced, whether accidentally or intentionally, during copying.[18] The critical apparatus prioritizes its focus on deviation from a hypothetical best text at individual moments by gathering the variants for such moments in separate critical annotations. That focus serves the purposes of foregrounding the preferred readings and documenting variation, but one side-effect is that it becomes challenging to use the edition with the goal of reading a particular witness consecutively, since the text of that witnesses is sometimes in the apparatus and sometimes implicitly in agreement with the main text.

Digital editions based on a critical apparatus can mitigate this complication by allowing the reader to select any witness as a copy text (a primary witness, presented continuously in its entirety, in the place of a dynamic critical text) and display readings from other witnesses as variants. This approach can be seen in, for example, Darwin online (see p. 1 at http://darwin-online.org.uk/Variorum/1859/1859-1-dns.html) and the Frankenstein Variorum (see p. 1 at https://frankensteinvariorum.org/viewer/1818/vol_1_preface/).

The centuries-long tradition of recording variation in a critical apparatus ensures that it will continue to be the preferred representation for some philologists, especially if their focus is on presenting a hypothesis about what is likely to have stood in an original text. At the same time, digital editions remove the economics of paper publication from an assessment of the costs and benefits of the concision afforded by the critical apparatus. A critical edition requires a record and representation of variation, but those do not have to be expressed in a traditional, footnoted critical apparatus. Ultimately the critical apparatus is one of several available ways of representing, for human perception and understanding, information about textual variation.

Alignment table

Overview

As explained above, an alignment table is a two-dimensional table that displays the full contents of all witnesses in intersecting rows and columns, where each cell contains either text or nothing. In Table I, above, each row contains all of the words from one witness to the textual tradition and the columns represent alignment points, that is, the columns align words that the editors regard as corresponding to one another across witnesses. If a witness has no reading at a particular location in the tradition the cell for that witness in that column is empty.

An alignment table, such as Table I above, avoids at least two of the limitations of a footnoted critical apparatus:

-

As noted above, reading the full text of a specific witness continuously from start to finish is challenging with a critical apparatus because some of the text is reported implicitly in the main reading text (that is, only because of the absence of any explicitly reported variant), while other text appears in footnoted apparatus entries. Reading a specific witness continuously from this type of edition therefore requires the reader to reassemble the continuous text mentally by identifying and piecing together snippets of the main text and pieces recorded as variants. Furthermore, because the footnoted apparatus prioritizes grouping the sigla of witnesses that share a reading, there is no stable place where a reader can expect to find the variants (if any) that belong to a particular witness. This fragmentation and inconsistency imposes a heavy cognitive load on readers who want to focus their attention on a particular witness.

Unlike a critical apparatus, an alignment table makes it easy to read the continuous text of any individual witness by reading across a row. There is no ambiguity about where to look for the text of a particular witnesses; all text (or gaps in the text) in a specific witnesses will always appear, in order and continuously, in a predictable row.

A limitation of an alignment table that arises as a consequence of making it easy to read the continuous text of any witness is that an alignment table does not represent patterns of agreement among witnesses as clearly as a critical apparatus, which groups the sigla that share a variant. With a small number of witnesses, as is the case with the six editions in Table I, above, it is not difficult to understand at a glance the agreements and disagreements. But especially because different agreement patterns mean that witnesses that agree will not always appear in adjacent rows in an alignment table, recognizing those groupings imposes increasing cognitive costs as the number of witnesses grows.

-

As also noted above, a critical apparatus typically includes only what the editor considers significant variants, which means that a reader cannot know, in the absence of any record of variation, whether there is no variation at a location or whether there is variation but the editor does not regard it as significant.[19] An alignment table, on the other hand, provides the full text of all witnesses, and therefore is naturally able to record variation whether the editor considers it significant or not. This enhances the documentary value of the edition and enables readers to form their own assessments of individual moments of variation, which is not possible in a selective apparatus-based edition that omits entirely variant readings that the editor considers insignificant.

At the same time, an apparatus-based edition with a dynamic critical text, such as the continuous reading text above the apparatus in our example from the Greek New Testament (Figure 1), always reports explicitly the readings that the editor prefers in situations involving variation. That reporting is not automatic in an alignment table that records only the readings from the witnesses, since that sort of table lacks a dynamic record of the editor’s interpretation of moments of variation. For that reason, if an alignment table is to record an editor’s interpretation of variation, it must add that interpretation as a supplement to the transcriptions of the witnesses. This feature is discussed below.

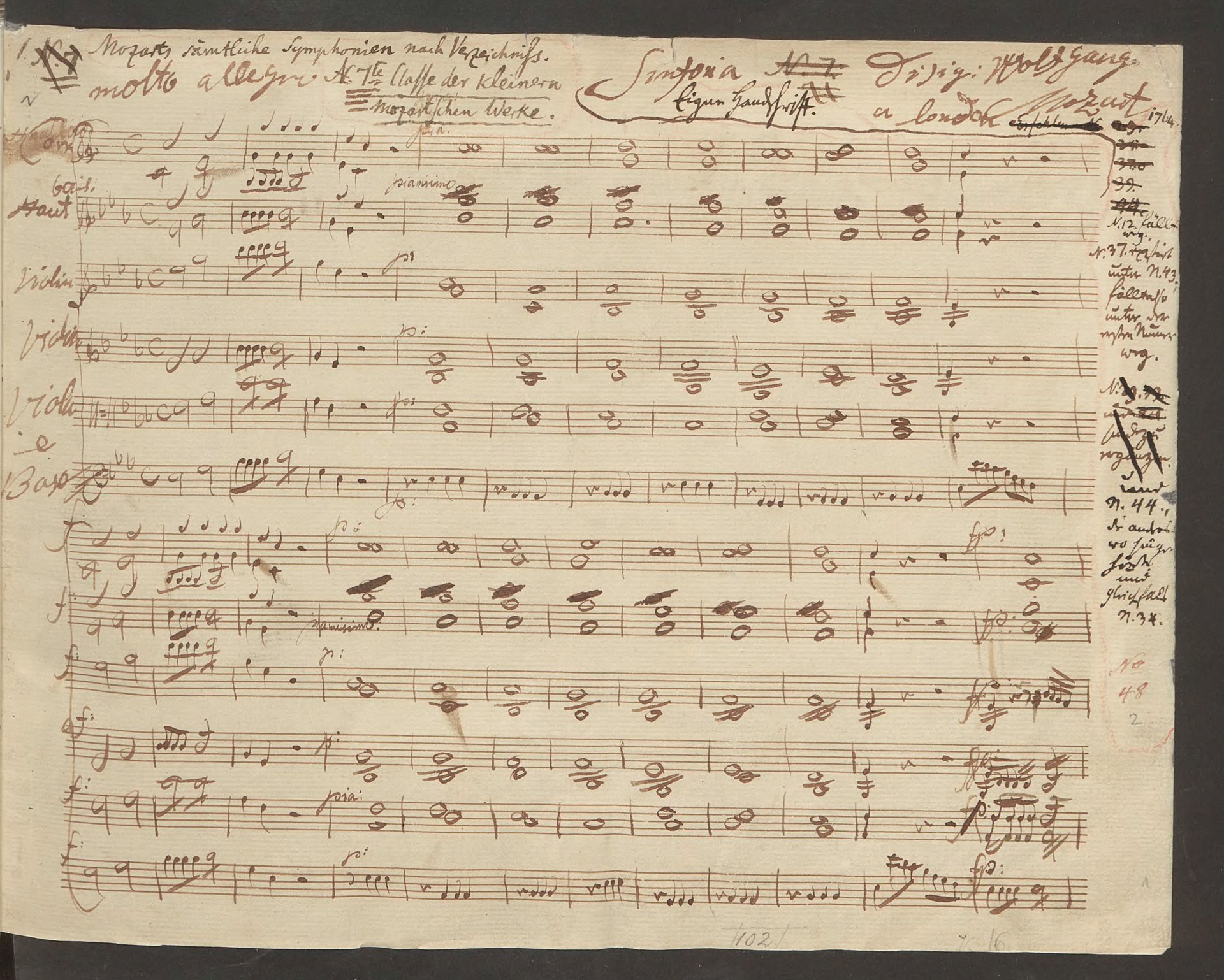

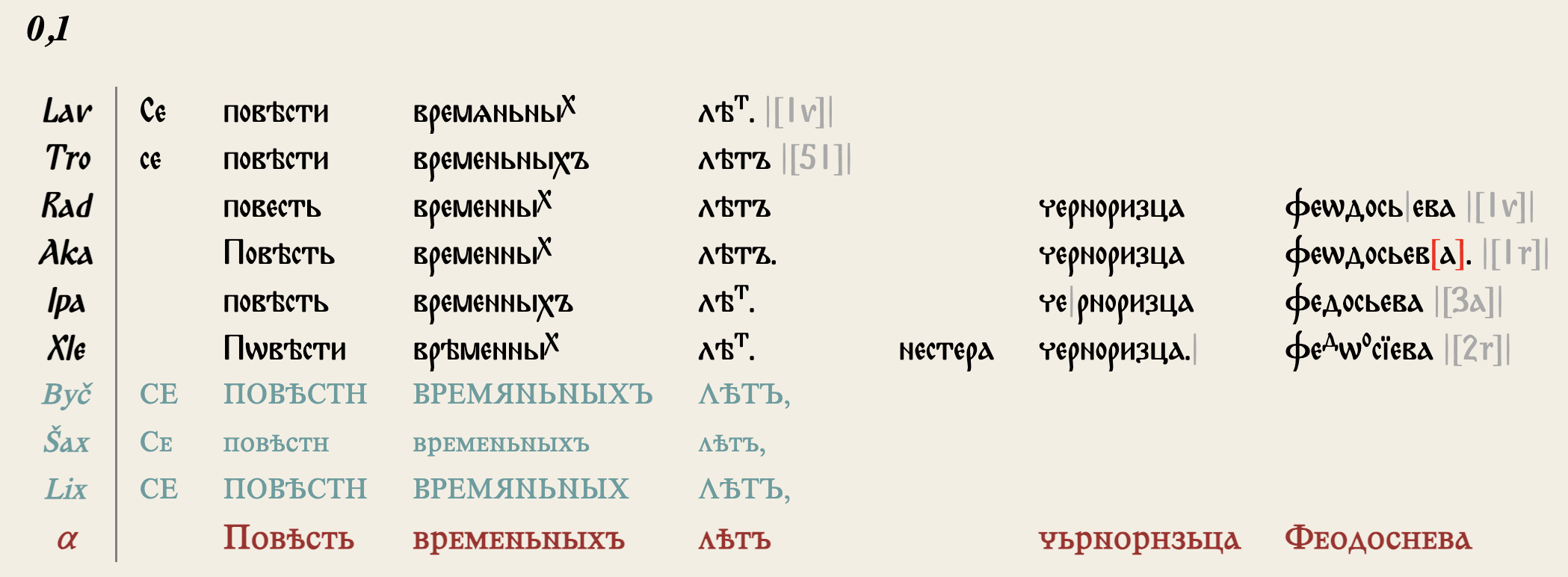

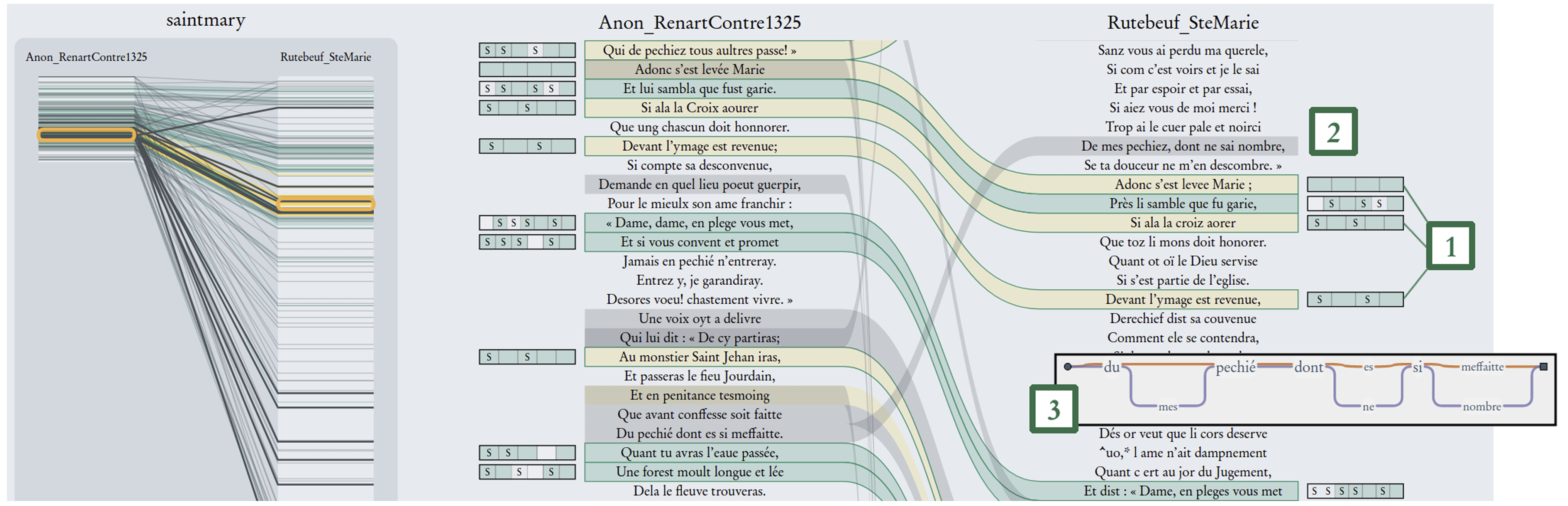

An edition published as an alignment table, such as Ostrowski 2003, is sometimes called an interlinear collation or a Partitur (German for musical score) edition, where the synchronized presentation of the text of all witnesses in parallel rows resembles a conductor’s orchestral score, which represents the different instrumental parts in parallel rows and aligns the measures in columns according to where parts are sounded together. The first image below is the beginning of an autograph manuscript of Mozart’s Symphony No. 1 in E♭ Major (K. 16):[20] The second is from the online edition of Ostrowski 2003.[21]

Figure 2: Mozart Symphony No. 1 in E♭ Major (K. 16), autograph manuscript

Figure 3: Rus′ primary chronicle (interlinear collation)

Both of these visualizations use rows to represent parts (instruments for Mozart, manuscripts and editions for Ostrowski 2003) and columns to represent alignments.

Swapping rows and columns in alignment tables

In the discussion and examples above we describe rows as representing witnesses and columns as representing alignment points, but nothing in the concept of the alignment table prevents the editor from reversing that layout, so that each witness occupies a particular column and the alignment points are represented by the rows. If we swap the rows and columns of Table I, the result looks like the following:

Table II

From Charles Darwin, On the origin of species

| 1859 | 1860 | 1861 | 1866 | 1869 | 1872 |

|---|---|---|---|---|---|

| The | The | The | The | The | The |

| result | result | result | result | results | results |

| of | of | of | of | of | of |

| the | the | the | the | the | the |

| various, | various, | various, | various, | various, | various, |

| quite | quite | quite | quite | ||

| unknown, | unknown, | unknown, | unknown, | unknown, | unknown, |

| or | or | or | or | or | or |

| but | but | ||||

| dimly | dimly | dimly | dimly | dimly | dimly |

| seen | seen | seen | seen | understood | understood |

| laws | laws | laws | laws | laws | laws |

| of | of | of | of | of | of |

| variation | variation | variation | variation | variation | variation |

| is | is | is | is | are | are |

| infinitely | infinitely | infinitely | infinitely | infinitely | infinitely |

| complex | complex | complex | complex | complex | complex |

| and | and | and | and | and | and |

| diversified. | diversified. | diversified. | diversified. | diversified. | diversified. |

These tables are informationally equivalent and each has advantages and disadvantages. In the case of digital editions of texts that are written in a left-to-right writing system, such as Darwin’s English-language On the origin of species, tension arises between the naturalness of placing each witness in its own row, to support continuous left-to-write reading (Table I), and the fact that after a fairly small number of words the display must either scroll horizontally (which users notoriously find less comfortable than vertical scrolling[22]) or wrap blocks of text that consist of several lines.[23] Arranging the witnesses in columns mitigates these limitations, but not without introducing its own complications:

-

As long as the number of witnesses is not large, arranging the witnesses in columns removes the need for horizontal scrolling, which is desirable from the perspective of the user experience (UX). Some editions, though, will require more witnesses than can comfortably be displayed across the screen without horizontal scrolling, which means that arranging the witnesses in columns is not a universal remedy for the inconvenience of horizontal scrolling.

-

One disadvantage of arranging the witnesses as columns is that it changes the word-to-word reading direction. In the case of the Darwin example, we are used to reading English-language texts horizontally, moving our focus down and to the left margin only when no more room remains on the current horizontal physical line. Arranging the witnesses in columns narrows those physical lines, with the result that reading a specific witness entails reading individual words horizontally while reading consecutive words entirely vertically. This is a not a familiar layout for reading English-language texts continuously.

Reducing repetition in alignment tables

An alignment table, whatever its orientation, involves a large (and often very large) amount of repetition. Unnecessary repetition during data entry creates opportunities for user error and unnecessary repetition in information modeling increases storage space.[24] At the same time, repetition is not necessarily undesirable for communicating information, and the focus of this report is primarily on visualization, and not on modeling or processing. Users most naturally recognize pieces of information as related when they are physically close to one another,[25] when they are similar in some way,[26] and when they appear inside the same boundary or container.[27] For these reasons, repeating words in each witness in an alignment table where they occur makes it easier in some ways for readers to perceive and understand the content of the individual witnesses.

It is possible in some circumstances to remove repetition in an alignment table by merging cells where adjacent witnesses contain the same readings. Table III, below, is informationally equivalent to Table II, above, but it removes variation by merging cells horizontally where witnesses share a reading.

Table III

From Charles Darwin, On the origin of species

| 1859 | 1860 | 1861 | 1866 | 1869 | 1872 |

|---|---|---|---|---|---|

| The | |||||

| result | results | ||||

| of | |||||

| the | |||||

| various, | |||||

| quite | |||||

| unknown, | |||||

| or | |||||

| but | |||||

| dimly | |||||

| seen | understood | ||||

| laws | |||||

| of | |||||

| variation | |||||

| is | are | ||||

| infinitely | |||||

| complex | |||||

| and | |||||

| diversified. | |||||

An obvious limitation of this approach is that it is not possible to merge cells that are not adjacent to one another. In Table III all readings that are shared by witnesses happen to be shared by witnesses that are adjacent in the table (and adjacent chronologically, since the columns are arranged by date of publication), but On the origin of species also contains readings that are shared by witnesses that are not chronologically consecutive. There is no consistent ordering of the six editions in the tables above that would make all shared readings adjacent, and repeatedly changing the order of the columns to manipulate the adjacency would introduce unwanted cognitive friction by undermining the reader’s spatial memory.[28]

Tokenization and alignment tables

The first stage of the Gothenburg Model, Tokenization, is where

the witness texts are divided into units to be aligned. The default tokenization in

the

release versions of CollateX separates tokens at sequences of whitespace (that is,

divides the text into orthographic words) and also breaks off boundary punctuation

marks

into their own tokens. Users can override this default. The three Darwin tables above

use a custom tokenization rule that separates the text into words on whitespace but

does

not break off boundary punctuation into its own token, so that, for example, the text

of

all witnesses ends with the single token diversified., which includes a

trailing dot, instead of with a sequence of the token diversified (without

the dot) followed by the token . (just a dot).

Separating the input texts into words during tokenization does not require that the

words be reported individually at the fifth and final Gothenburg stage, called

Visualization[29]. CollateX supports a process that it calls

segmentation, which merges adjacent alignment points that share

alignment properties. For example, all witnesses in our Darwin example have the same

first token (The) and same third through fifth tokens (of the

various,), but there are differences in the second token (result

vs results) and the sixth (quite in four witnesses and nothing

in the other two). With segmentation activated, Table I would

look like:

Table IV

From Charles Darwin, On the origin of species

| 1859 | The | result | of the various, | quite | unknown, or | dimly | seen | laws of variation | is | infinitely complex and diversified. | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1860 | The | result | of the various, | quite | unknown, or | dimly | seen | laws of variation | is | infinitely complex and diversified. | |||||||||

| 1861 | The | result | of the various, | quite | unknown, or | dimly | seen | laws of variation | is | infinitely complex and diversified. | |||||||||

| 1866 | The | result | of the various, | quite | unknown, or | dimly | seen | laws of variation | is | infinitely complex and diversified. | |||||||||

| 1869 | The | results | of the various, | unknown, or | but | dimly | understood | laws of variation | are | infinitely complex and diversified. | |||||||||

| 1872 | The | results | of the various, | unknown, or | but | dimly | understood | laws of variation | are | infinitely complex and diversified. | |||||||||

The point of segmentation is that an open alignment point ends and a new one begins not with every new token, but only when the agreement pattern among witnesses changes. In this example the alignment-point columns alternate between those that show full agreement (columns 1, 3, 5, 7, 9, and 11) and those that show variation or indel situations (columns 2, 4, 6, 8, 10). It will not always be the case that columns will alternate in this way; for example, if there are two adjacent alignment points that both show variation, but with different patterns of agreement among witnesses, the two will be output consecutively.

An alignment table with segmentation can arrange the witnesses either in rows, as in Table IV, above, or in columns, as in Table V, below:

Table V

From Charles Darwin, On the origin of species

| 1859 | 1860 | 1861 | 1866 | 1869 | 1872 |

|---|---|---|---|---|---|

| The | The | The | The | The | The |

| result | result | result | result | results | results |

| of the various, | of the various, | of the various, | of the various, | of the various, | of the various, |

| quite | quite | quite | quite | ||

| unknown, or | unknown, or | unknown, or | unknown, or | unknown, or | unknown, or |

| but | but | ||||

| dimly | dimly | dimly | dimly | dimly | dimly |

| seen | seen | seen | seen | understood | understood |

| laws of variation | laws of variation | laws of variation | laws of variation | laws of variation | laws of variation |

| is | is | is | is | are | are |

| infinitely complex and diversified. | infinitely complex and diversified. | infinitely complex and diversified. | infinitely complex and diversified. | infinitely complex and diversified. | infinitely complex and diversified. |

Regardless of the orientation of the table, it is also possible (with this example, but not universally) to combine the merged or shared readings with segmentation, as in:

Table VI

From Charles Darwin, On the origin of species

| 1859 | 1860 | 1861 | 1866 | 1869 | 1872 |

|---|---|---|---|---|---|

| The | |||||

| result | results | ||||

| of the various, | |||||

| quite | |||||

| unknown, or | |||||

| but | |||||

| dimly | |||||

| seen | understood | ||||

| laws of variation | |||||

| is | are | ||||

| infinitely complex and diversified. | |||||

As we said earlier, merging cells where witnesses share a reading is possible only with adjacent cells, which means that it is a useful visualization only where all shared readings are shared by consecutive witnesses. That pattern occurs in the example above, but it it not the case elsewhere in On the origin of species.

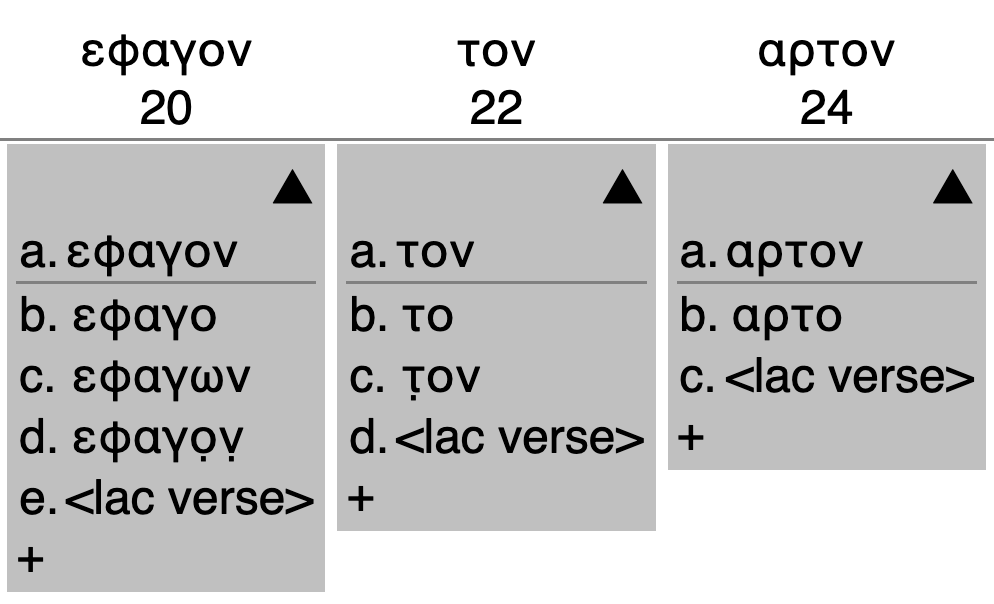

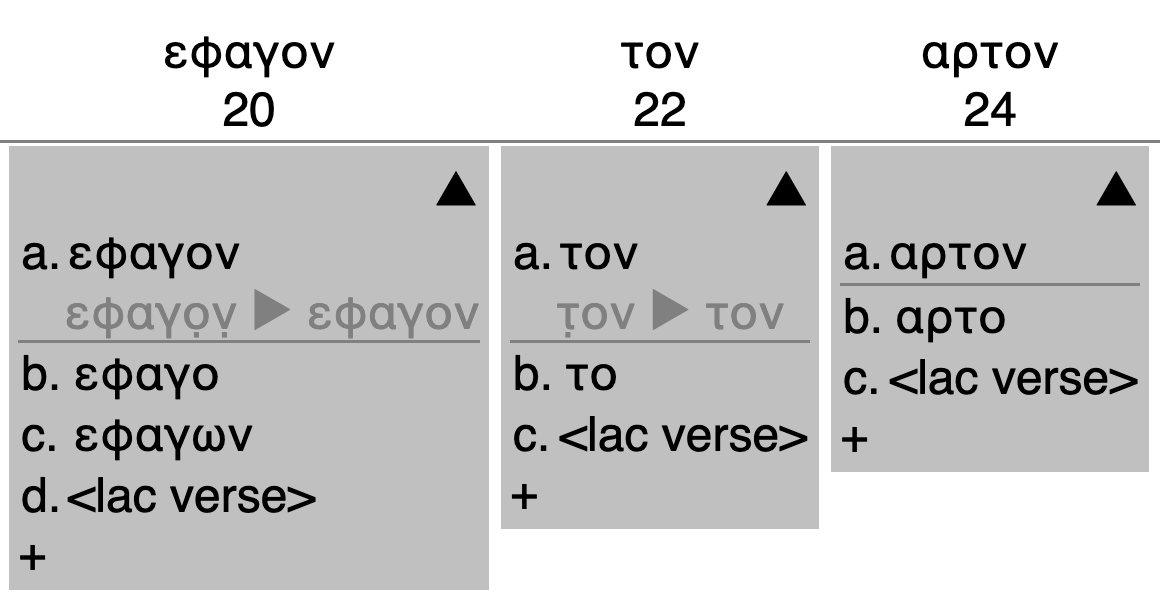

Single-column alignment table

A modification of the alignment table to deal with fact that shared readings can be merged visually only when the witnesses are adjacent in the table is the single-column alignment table. This visualization divides the output the same way as the segmentation examples, above—that is, it starts a new alignment point when the pattern of agreement among witnesses changes. As the name implies, though, instead of rendering different witnesses in their own columns and merging adjacent ones, it displays the readings for an alignment point in a list within a single column, e.g.:

Table VII

From Charles Darwin, On the origin of species

| No | Readings |

|---|---|

| 1 |

|

| 2 |

|

| 3 |

|

| 4 |

|

| 5 |

|

| 6 |

|

| 7 |

|

| 8 |

|

| 9 |

|

| 10 |

|

| 11 |

|

The organization of the Readings column looks familiar because it is identical to a positive critical apparatus in that it groups and records the readings of all witnesses, and not only those that diverge from the editor’s preferred reading. In this case, for reasons discussed above, there is no dynamic critical text, and although we could select a copy text (such as the first edition as chronologically primary or the last as Darwin’s final, and therefore most experienced, expression of his ideas), there is no lost original to imagine and (re)construct. With that said, if we were to select one witness as primary, to be presented consecutively, the Readings column could be synchronized with it automatically and rendered as either a positive critical apparatus (as is) or a negative critical apparatus (by removing the sigla for the copy text and witnesses that agree with it from the apparatus entries).

We found the single-column alignment table useful during development because it provided the same information about an individual alignment point as we would find in a row of Table VI, except that the single-column alignment table could also record the agreement of witnesses that were not consecutive in chronological or any other consistent order, which is a feature that cannot be expressed in an alignment table. At the same time, although the single-column alignment table provides a useful representation of a single alignment point, it is difficult to read consecutively. All of the information needed to reconstruct the full, continuous text of any witness is present, but because of unpredictable layout and gaps in witnesses, the visual flow through a single witness is inconsistent, interrupted, and distracting. Insofar as the single-column alignment table is ultimately just a positive critical apparatus without a main text, it is not surprising that it reproduces the challenges of using a critical apparatus to read a single witness continuously, and it does so without the continuous and legible critical text that accompanies a traditional critical apparatus.

The best text

in an alignment table

Unlike with critical apparatus layout, which foregrounds the editor’s assessment of the best reading by placing it—and only it—in the main text, the transcription and interlinear publication of all witnesses does not automatically include an editorial judgment about which reading to prefer at moments of variation. To incorporate editorial assessment, and not just transcription, into an interlinear collation editors can include, in parallel with the actual textual witnesses, their own determination of a best reading. In Figure 3 (from Ostrowski 2003), above, the black rows represent transcriptions from manuscript witnesses, the red row at the bottom represents the editor’s dynamic critical text, and the blue rows represent critical texts published by other editors. This arrangement makes it easy to see at a glance where the witnesses agree or disagree with one another, which readings the editor considers most authoritative at each location, and how other editors evaluated the same variation to arrive at their decisions about which readings should be incorporated into the critical text.

An interlinear edition overcomes many of the intellectual and cognitive limitations of a critical apparatus, but at the expense of being practical only with a fairly small number of witnesses because the difficulty of seeing the patterns of agreement grows as the number of witnesses in the edition increases. A related consideration, at least with respect to paper publication, is that an interlinear collation incorporates a large amount of repetition or redundancy, which increases the size (and therefore also the production cost) of the edition. For example, the paper edition of Ostrowski 2003, with approximately ten witnesses and editions, fills three volumes that contain a total of approximately 2800 8-1/2 x 11 pages and occupy approximately eleven inches of shelf space.[30]

Redundant repetition is sometimes regarded instinctively as undesirable because by definition it contributes no information that is not already available in a different form. In the case of visualization, though, repetition that may be informationally redundant may nonetheless contribute to the rhetorical effectiveness of the edition. For that reason, repetition is not automatically a weakness that should be avoided in a visualization; it is, instead, a communicative resource with costs and benefits that must be assessed on their own terms.

Alignment table summary

Ordering challenges: Even when the number of witnesses is not large, an interlinear collation raises questions about how to order them. On the one hand, ordering the witnesses identically throughout the edition enables the reader to memorize their relative and absolute positions quickly, avoiding the cognitive friction that would arise from having to read the sigla carefully at every line to verify which readings go with which witnesses. On the other hand, it would be easier to see which witnesses share readings if those witnesses were adjacent to one another, and in that case the groupings (that is, the grouping-dependent orders) might vary at different locations in the edition. We find consistent order easier to understand, even when it means that not all shared readings will be rendered in adjacent or merged cells. In Ostrowski 2003, for example, the witnesses observe a consistent order and are grouped according to overall patterns of agreement suggested by a stemma codicum, even though that means that sometimes witnesses that share readings may be separated from one another visually by text from other witnesses.[31]

Repetition challenges: An alignment table that does not merge witnesses, and that instead repeats readings for each witness in which they appear (such as Table I, above), makes it easy to read any individual witness continuously. At the same time, not merging adjacent cells where witnesses share a reading means that the reader has to determine at every alignment point which witnesses agree with which others. How easy that is depends on the visual similarity of the readings. For example, readings of different lengths may be recognized easily as different, while readings of the same length may require closer inspection and consideration.

Separating the recording of variation from its evaluation: Insofar as an alignment table contains an affirmative statement about what each witness says (or doesn’t say) at every alignment point, it avoids the selectivity that can prevent readers from forming their own assessments of an editor’s decision about whether two witnesses attest a significant difference. The continuous text above a critical apparatus necessarily presents a privileged reading, either as a dynamic critical text or as a best witness selected as a copy text. Because an alignment table presents a legible continuous view of every witness, it does not automatically have a single privileged text (whether a dynamic critical text or a best witness). The editor of an alignment table may incorporate a dynamic critical text by entering it alignment point by alignment point, in parallel with the witness data, as in Figure 3.

Comparing alignment tables and critical apparatus: Our (somewhat subjective) experience has been that:

-

An alignment table makes it easy to read the continuous text of any witness, but harder to see which witnesses agree or disagree at a particular location. A critical apparatus makes it easier to see the patterns of agreement and variation, but harder to read any the text of witness continuously except the base text.

-

With a small number of witnesses an alignment table is more informative and easier to understand than a critical apparatus.

-

Both a critical apparatus and an alignment table quickly become difficult to read and understand as the number of witnesses increases, but an alignment table becomes challenging sooner than a critical apparatus. Because an alignment table is much more verbose than a critical apparatus, it also becomes impossible to represent on a single screen or page much sooner than is the case with a critical apparatus.

Graphic visualizations

Variant graph

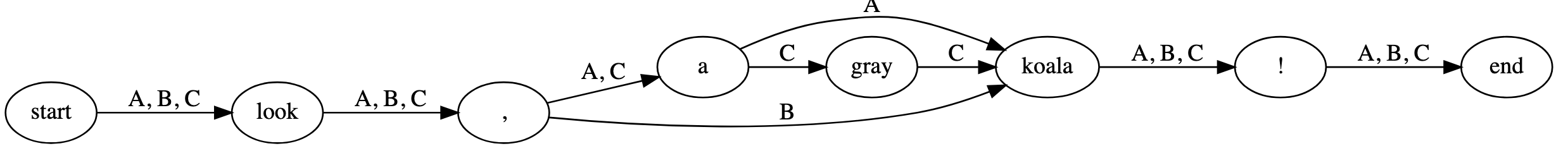

The model used internally for recording the alignment of witnesses in current releases of CollateX is based on the variant graph, a structure popularized in Schmidt and Colomb 2009 after having been introduced almost half a century earlier. An SVG representation of the variant graph is also the principal graphic output format available in CollateX.

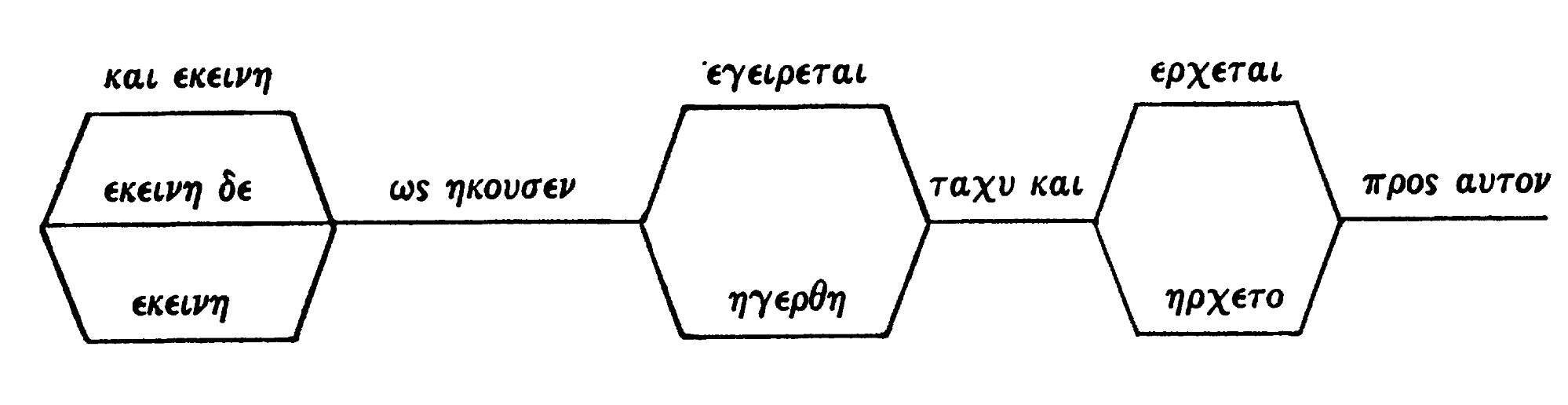

Variation-unit (Colwell and Tune 1964)

The earliest discussion of the variant graph as both model and visualization of which we are aware is Colwell and Tune 1964, which appears not to have been cited subsequently in relevant literature until its rediscovery by Elisa Nury and Elena Spadini (Nury and Spadini 2020, p. 7), who reproduce the example below:

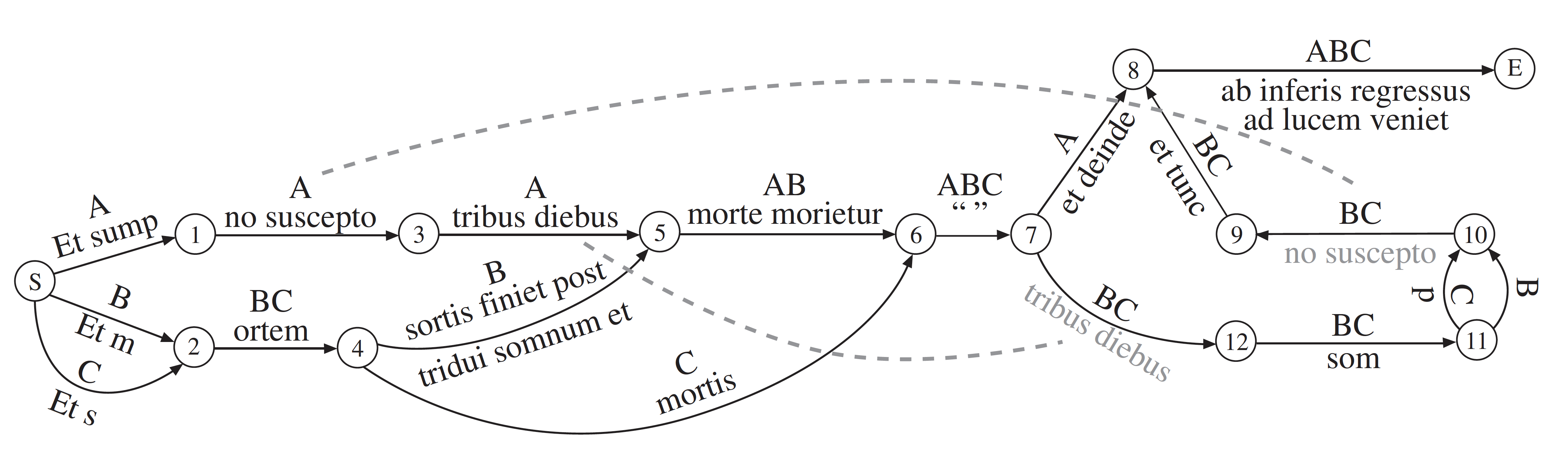

Figure 4: Variant graph (Colwell and Tune 1964, p. 254)

Colwell and Tune 1964 uses the term variation-unit to describe a location where not all witnesses agree.[32] Their illustration records the text of the readings on what graph theory would call the edges, with no information recorded on the nodes. The discussion in their article leaves no doubt that they are also tracking, for each variation-unit, which readings are attested in which witnesses, although they do not include witness identifiers in their illustration.

Our term alignment point, discussed above, is not the same as the Colwell and Tune 1964 variation-unit because an alignment point includes both locations with variation and locations where all witnesses agree, while the variation-unit in Colwell and Tune 1964 refers only to locations where witnesses diverge. In Figure 4, then, there are three variation-units but six alignment points. The focus on locations with variation matters in Colwell and Tune 1964 because the authors propose that variation-units be counted to explore and assess relationships among witnesses, and most of their article focuses on principles for classifying and evaluating types of variant readings as part of the text-critical process.[33]

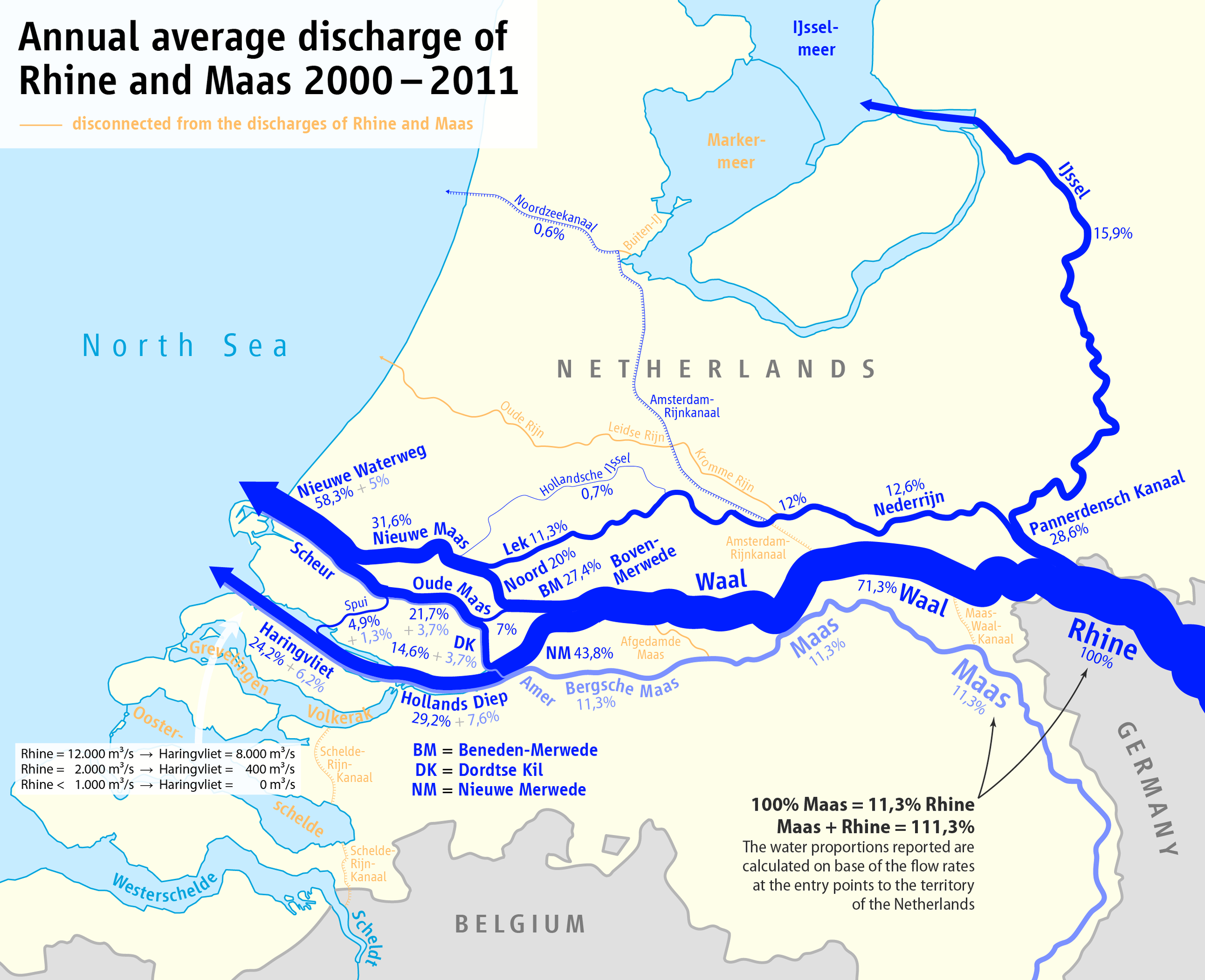

Rhine Delta (Sperberg-McQueen 1989)

The next appearance of the variant graph that we have been able to locate is Sperberg-McQueen 1989, which is also mentioned in passing in Nury and Spadini 2020 (p 7, fn 19). Sperberg-McQueen 1989 does not include any images (the write-up originated as a two-page conference abstract), but it describes the confluence and divergence of readings as analogous to the branches of a river delta, adopting the label Rhine Delta for the model. The illustration below shows how the Rhine (and Meuse) split into multiple channels, some of which may then merge or continue to divide:

Figure 5: Rhine–Meuse delta[34]

Annual average discharge of the Rhine and Meuse 2000-11

Under the term Rhine Delta, Sperberg-McQueen introduces many features and properties of the variant graph that serve as the focus of later work by others:

In this non-linear model, the multiple versions of a text are imagined not as so many parallel, non-intersecting lines, but as curves that intersect, run together for a while, and then split apart again, like the channels in a river delta. Unlike the channels of most river deltas, the versions of a text often merge again after splitting. The data structure takes its name from one riverine delta where such reunion of the channels does occur; I have christened it the

Rhine Deltastructure. Unlike the two-dimensional model of complex texts, this structure stores passages in which all versions agree only once; it is thus more economical of space. It also records the agreements and divergences of manuscripts structurally, which makes the task of preparing a critical apparatus a much simpler computational task.Formally, the Rhine Delta structure is a directed graph, each node of which is labeled with one token of the text and with the symbols of the manuscripts which contain that token. Each arc linking two tokens is labeled with the symbols of the manuscripts in which the two tokens follow each other. There is a single starting node and a single ending node. If one follows all the arcs labeled with the symbol of a specific manuscript, one visits, in turn, nodes representing each token of that manuscript, in sequence. Passages where all the manuscripts agree are marked by nodes and arcs bearing all the manuscript symbols. Passages where they disagree will have as many paths through the passage as there are manuscript variants.

It can be shown that from this structure we can, for any variant, produce all the conventional views of linear text and perform all the usual operations (deletion, insertion, replacement, travel, search and replace, block move, etc.). Moreover, we can readily generate the various conventional views of complex texts: base text with apparatus, texts in parallel columns, text in parallel horizontal lines. Unlike other methods of handling textual variation, the Rhine Delta has no computational bias toward any single base text state; the user pays no penalty for wishing to view the text in an alternate version, with an apparatus keyed to that version. (Sperberg-McQueen 1989)

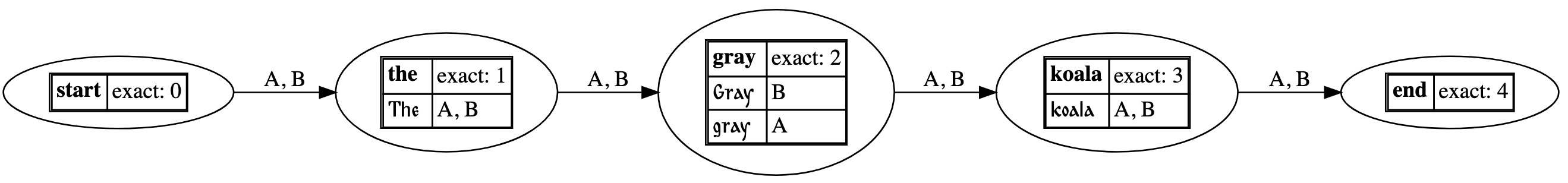

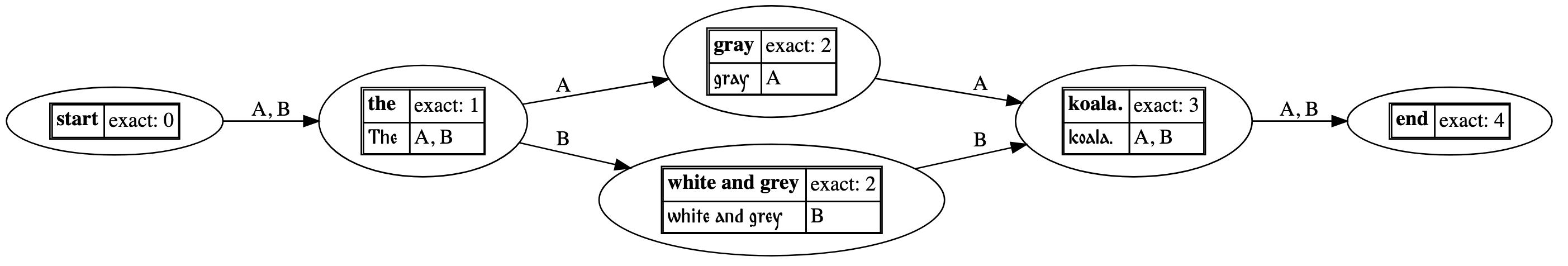

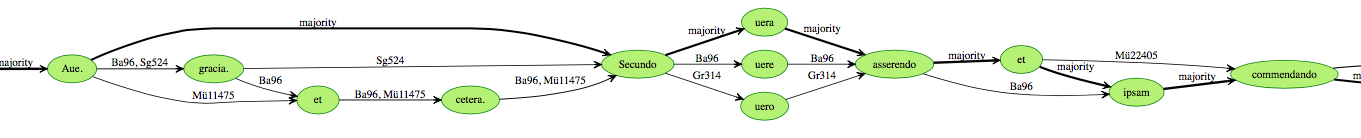

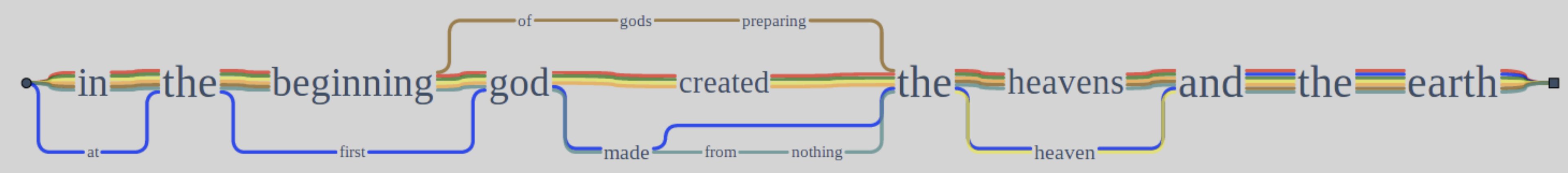

The Rhine Delta model as described in Sperberg-McQueen 1989 records textual readings and witness identifiers on nodes and witness identifiers (alone) on edges, which is also the way information is allocated among nodes and edges in CollateX.[35] The following image is part of the CollateX variant-graph visualization of the data in Table I, but see also the excerpt from Documentation (CollateX) below, which explains how this visualization does not, in fact, expose all token information:

Figure 6: Variant graph (https://collatex.net)

Variant graph (Documentation (CollateX) version, with textual content on nodes)

As far as we can tell, Sperberg-McQueen 1989 appears not to have been discussed in any detail in the literature until the author republished the full text of the abstract himself on his own website after hearing a conference presentation that described a model with very similar properties. Sperberg-McQueen 1989 explains that:[36]

This work came to mind recently when I heard the paper

A Fresh Computational Approach to Textual Variationby Desmond Schmidt and Domenico Fiormonte at the conference Digital Humanities 2006, the first International Conference of the Alliance of Digital Humanities Organizations (ADHO), at the Sorbonne in Paris earlier this month. So I have unearthed the abstract and put it on the Web.

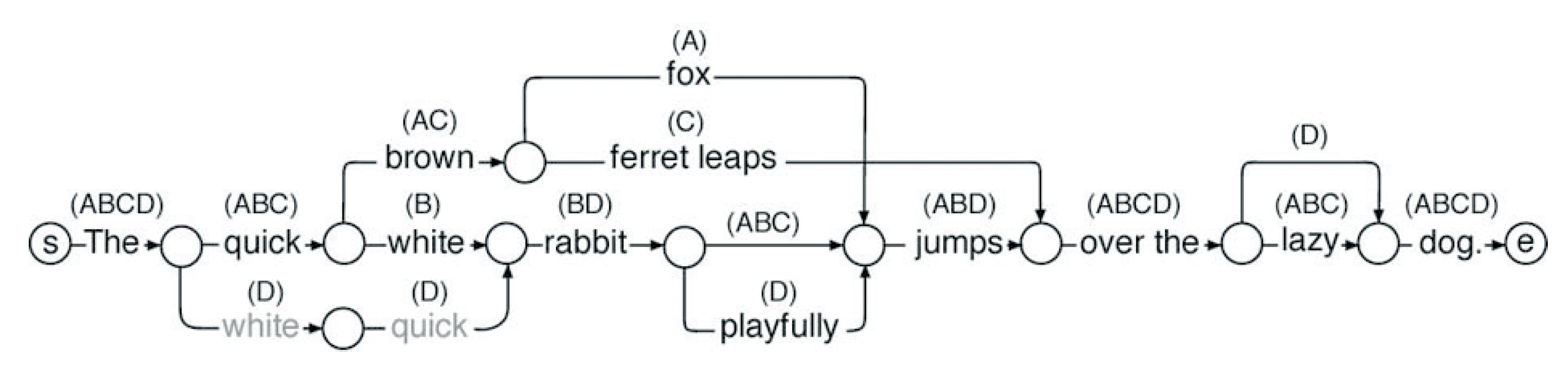

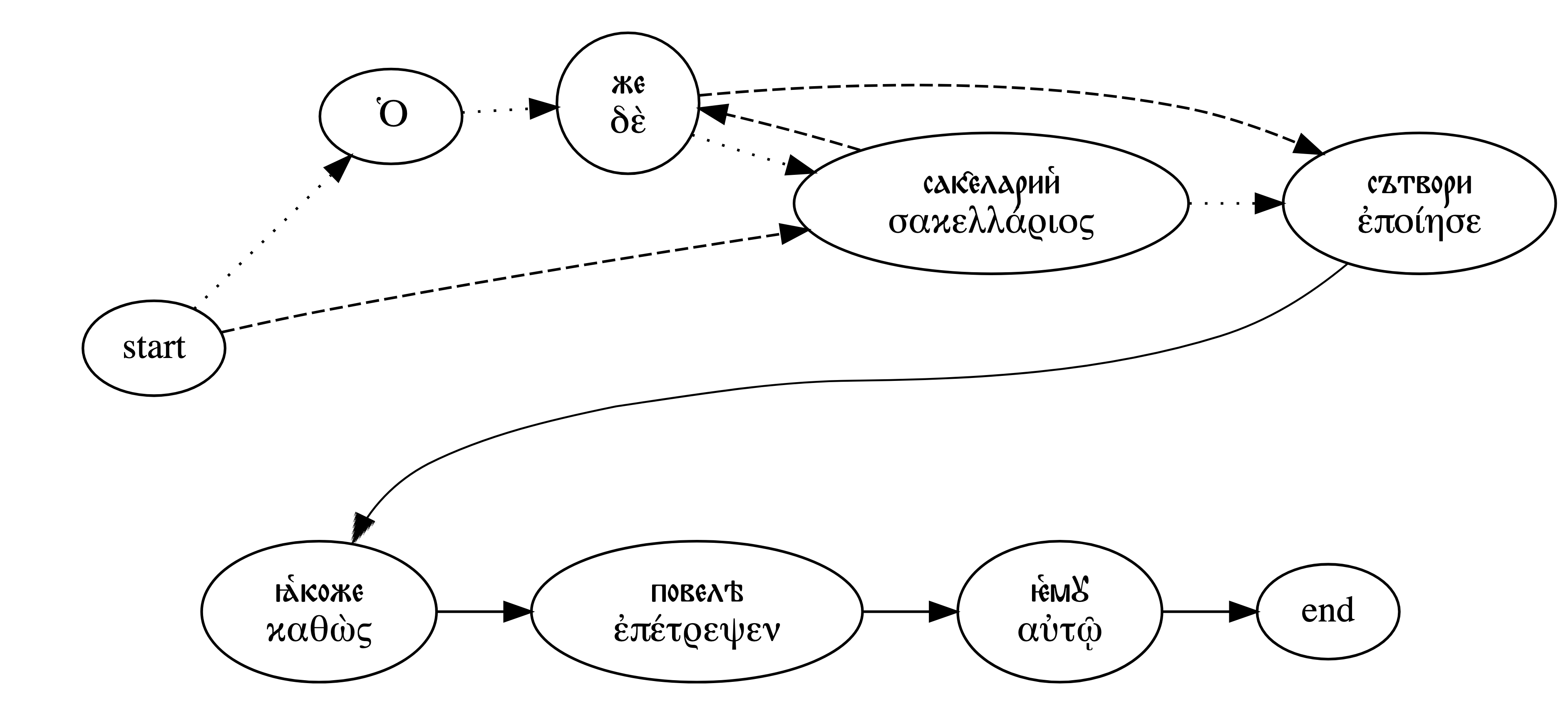

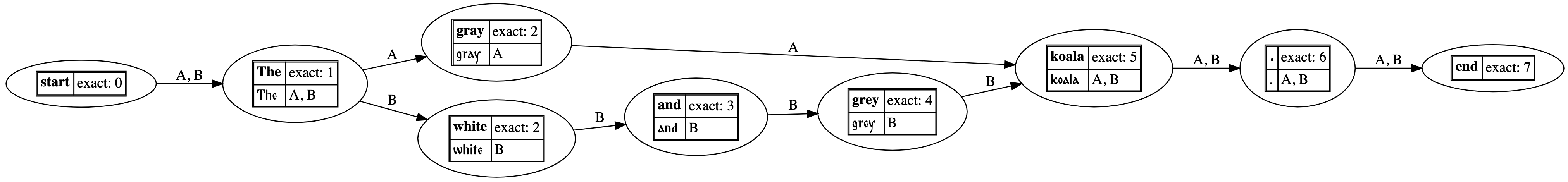

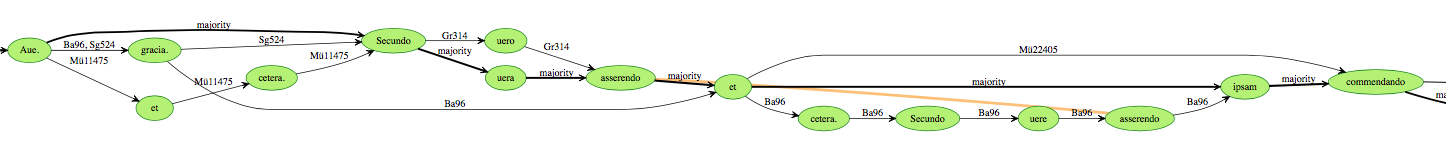

Textgraph (Schmidt and Fiormonte 2006)

The abstract of the 2006 ADHO presentation by Schmidt and Fiormonte mentioned above was published as Schmidt and Fiormonte 2006, where the authors describe and illustrate a variant graph structure that they call a textgraph. The following image is from p. 194 of that conference abstract:

Figure 7: Textgraph (Schmidt and Fiormonte 2006, p. 194)

Textgraph is the term in Schmidt and Fiormonte 2006 for what is called a variant graph in Schmidt and Colomb 2009

The features of the textgraph in Schmidt and Fiormonte 2006 are largely the same as those of the variant graph in Schmidt and Colomb 2009, discussed immediately below.

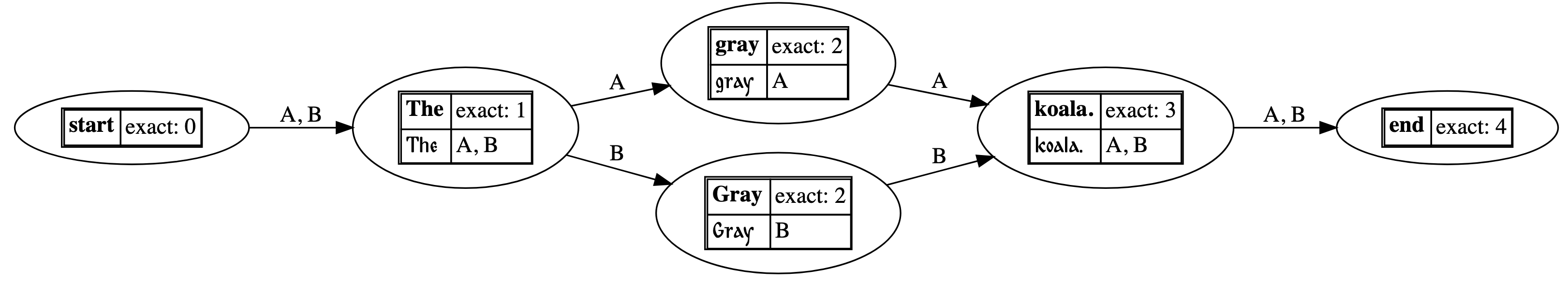

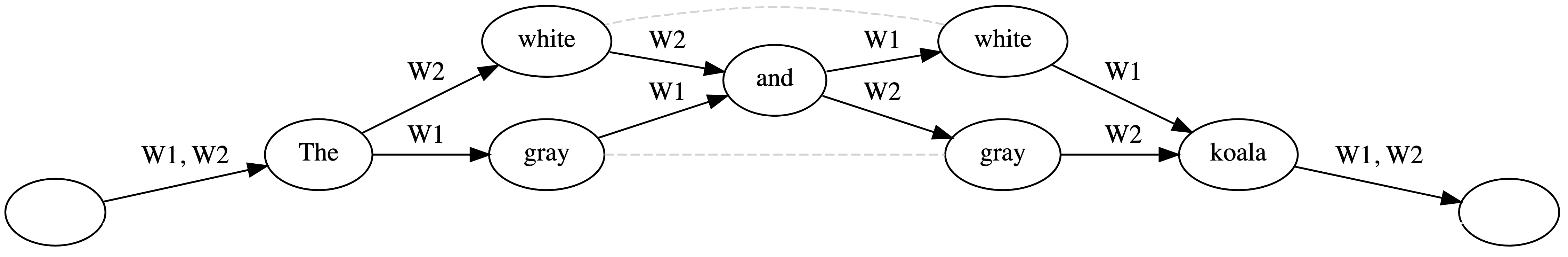

Variant graph (Schmidt and Colomb 2009)

The first use we have been able to find of the term variant graph is in Schmidt and Colomb 2009, which presents the same general model as Schmidt and Fiormonte 2006, but in greater detail and with more explanation. The following variant graph image is from Schmidt and Colomb 2009, p. 510:

Figure 8: Variant graph (Schmidt and Colomb 2009, p. 510)

Variant graph (Schmidt and Colomb 2009 version, with textual content on edges)

Schmidt and Colomb 2009 emphasizes many of the same appealing features of the variant graph as a model as Sperberg-McQueen 1989: it reduces redundancy (see, for example, the extensive repetition in Table I), it permits the concise representation of textual editing operations (§3.4, pp. 503–04), and it supports specific computational operations on the graph itself (reading a single version, searching a multi-version text, comparing two versions, determining what is a variant of what, and creating and editing (§5, pp. 508–10)). The algorithm in Schmidt and Colomb 2009 for creating and editing a variant graph is progressive in the sense in which that term is traditionally used in multiple-sequence alignment, that is, it incorporates one singleton witness at a time into the graph.

The representation of the variant graph in Schmidt and Colomb 2009

puts both textual content and witness identifiers on the edges of the graph. The Start

and End nodes, indicated by circled S

and E

, represent the

starting and ending point of a traversal. There is exactly one path from start to

end

for each witness, which can be traversed by following the edges labeled for that

witness. The dotted lines represent transposition edges; they function as references

(the gray text is a copy of the black text with which it is connected by a tranposition

edge) and are not part of any traversal.

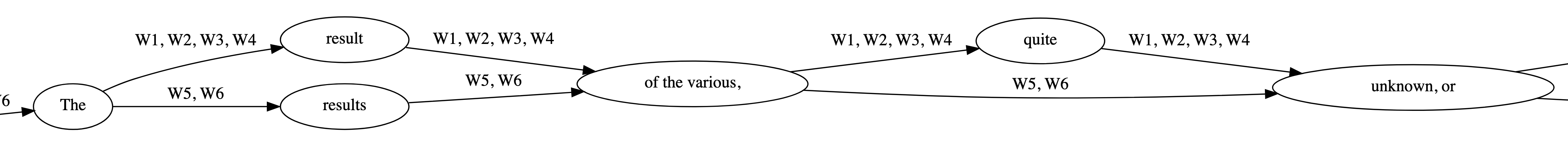

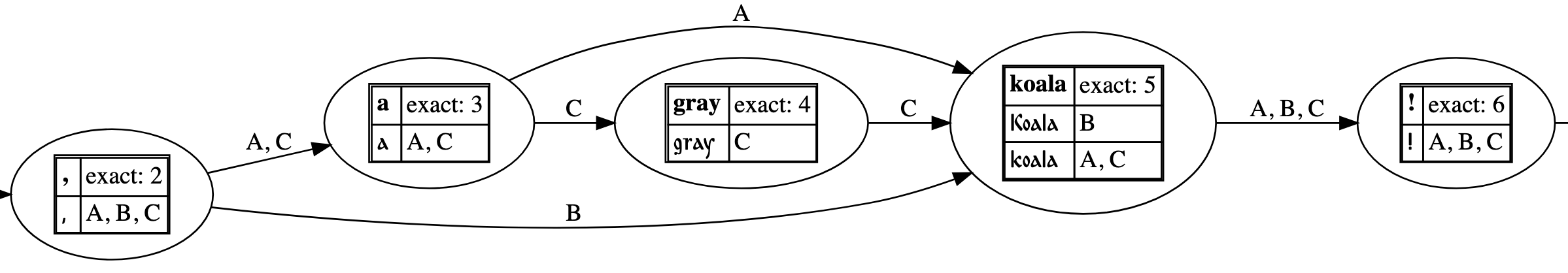

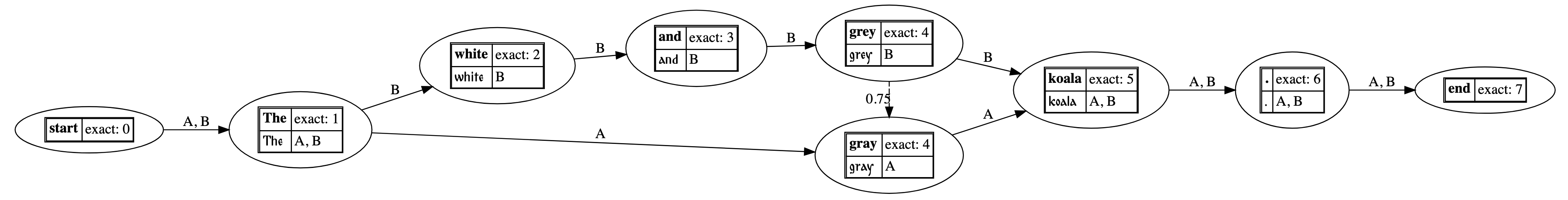

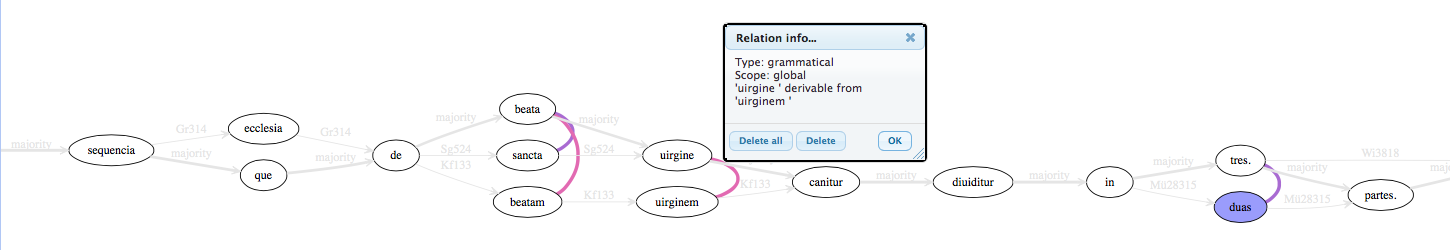

The variant graph as a model in CollateX (Documentation (CollateX))

As mentioned above, the CollateX variant graph, similarly to the earlier Rhine Delta model and unlike the model in Schmidt and Colomb 2009, stores the tokens that contain textual readings on the nodes of the graph, and the only information that the Rhine Delta model and CollateX store on the edges is witness identifiers. Schmidt and Colomb 2009 do not mention this difference; the lone reference to Sperberg-McQueen 1989 in Schmidt and Colomb 2009 reads, in its entirety:

Such a structure is intuitively suited to the description of digital text, and something like it has been proposed at least once before in this context, but was abandoned apparently because it could not be efficiently expressed in markup (Sperberg-McQueen, 1989).

It is possible to transform either of the two representations (text on nodes vs text on edges) to the other automatically, which means that they can be implemented in ways that are informationally equivalent, but the difference nonetheless merits attention from a software-engineering perspective; see the discussion below.

CollateX was developed under the auspices of the EU Interedition research program in 2009, with Ronald Haentjens Dekker and Gregor Middell as the two project leads. (About (CollateX)) Middell brought Schmidt and Colomb 2009 to Haentjens Dekker’s attention (neither developer knew about Sperberg-McQueen 1989 at the time), they recognized the variant graph as a useful model of textual variation, and they modified what they found in Schmidt and Colomb 2009 to move the tokens off the edges and onto the nodes. Insofar as edges in graph theory express relationships between nodes, putting no information on the nodes and all information on the edges would reduce the nodes to nothing but meeting places for edges, with no information or properties of their own, which makes the meaning of the nodes opaque.[37] But the developers of CollateX also had a more specific reason for putting the witness content on the nodes: the tokens that represent witness content in CollateX are complex objects with multiple properties, and not just string values. As Middell explains in Documentation (CollateX) (text in square brackets has been added):

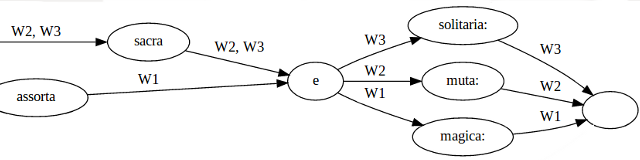

In order to account for the separation of concerns laid out above [the five stages of the Gothenburg Model], CollateX’[s] implementation of Schmidt’s model adjusted the latter slightly. Instead of labelling the edges of a variant graph with two attributes—the content as well as the sigils [witness identifiers] of text versions containing it—the edges of variant graphs in CollateX are only labeled with sigil sets. The version’s content segments—in the form of partial token sequences—have been moved to the nodes/vertices. The ending of the example graph then looks like this (with sigils being mapped from A, B, C to W1, W2, W3):

CollateX’[s] Variant Graph Model

The above illustration does not reveal the internal structure of the graph completely insofar as the nodes’ labels in this figure are a simplification. For instance, the second node in the graph (read from left to right) is labeled

sacra, with the two versions W2 and W3sharing some content. More precisely though and in line with the above definition of tokens and their equivalence relation, W2 and W3 do notshare some content. Instead they both contain a token with the contentsacra, both deemed to be equal according to the definition of a specific token comparator function. In the graphical representation of the variant graph above, this subtle distinction is not made and both tokens are just represented via their common textual content. In CollateX’[s] data model though, this distinction is still relevant and represented: Each node/vertex in a variant graph is not modelled via textual content (as it would be the case when translated directly from Schmidt’s model) but as a set of tokens per node originating from one or more versions, with all tokens in such a set belonging to the same equivalence class.The described change to Schmidt’s model serves mainly two purposes: Firstly, it allows for arbitrary tokens to be compared and their commonalities, differences as well as their sequential order to be represented in a graph structure. Secondly, CollateX’[s] graph model is easy to transform into the tabular representation introduced further above by ranking the graph’s nodes in topological order and aligning tokens which belong to nodes of the same rank.

Where transpositions occur (not in the example above), the CollateX variant graph represents them with dotted lines. This corresponds to a similar special type of edge between nodes that contain the same textual content in Schmidt and Colomb 2009, where it is represented by a dashed line (see the example at Figure 8). The use of a special type of edge avoids cycles when traversing the graph according to its principal edges.

The variant graph as a visualization in CollateX

The utility of the variant graph in modeling has been confirmed by the successful use of CollateX in projects, where the variant graph functions as the internal model of the result of the alignment process, which can then be rendered visually as a graph (as in Figure 6, above) or transformed into an alternative visualization format (such as the alignment table in Table I, above). The focus of the present report, however, is not on the variant graph as a model, but on its utility as a final-form graphic visualization that communicates alignment and variation information to end-users.

Any visualization of variation, including the textual (and pre-digital) critical apparatus, becomes difficult to read as the extent of the variation grows, and both the critical apparatus and the variant graph manage the volume of information with the help of methods that we can usefully compare to data compression. Both visualization and data compression are transformations of a data set that retain something that matters for a particular purpose while excluding details that don’t matter for that purpose. As the name implies, the purpose of data compression is creating an output file that is smaller (as measured in bytes) than the non-compressed input. The visualization of data typically (although not obligatorily) aims for a smaller presentation size. Smaller presentation in the case of a critical apparatus might entail including only variation that the editor considers significant and choosing a negative apparatus over a positive one. In the case of graphic visualization, the editor includes only selected information with the goal of fitting into a small space (such as a single screen or page) a representation of just what the editor considers important (for a particular purpose) about the data.

Data compression methods are commonly categorized as either lossless or lossy, where the difference is that the exact original data can be restored from lossless—but not from lossy—compression. A critical apparatus that includes only variation that the editor considers significant is lossy because it excludes—completely and irretrievably—information that end-users might consider important. Insofar as a critical edition is often used as a surrogate for original data sources, especially when original manuscript data is not easily available to users of the edition, variation that an editor regards as insignificant is not documented and not recoverable. Choosing a negative apparatus instead of a positive one, on the other hand, is lossless because a negative apparatus comes with an implicit default: the editor asserts that any witness not recorded explicitly in an apparatus entry agrees at that location in all significant features with the continuous reading text (whether dynamic critical text or copy text based on a favored manuscript).